When we talk about logging and alerting, Security Operations teams need to address two distinct functions.

At this point in time, Microsoft Sentinel is undisputedly the preferred security alert management product. It provides broad capabilities for correlating real-time alerts and integrating alerting with SOAR, and there are many positive things to call out about Sentinel as a SIEM. The other aspect of modern security is hunting, and this was never going to be a core capability of Sentinel.

We are all well aware that significant incursions may not be discovered for a year or longer. Given the sophistication of cyber attacks, SOC teams need enormous quantities of data preserved for extended periods. This is recognised in the expectations of OMB memorandum M-21-31, "Improving the Federal Government's Investigative and Remediation Capabilities Related to Cybersecurity Incidents". Storing hundreds of terabytes or petabytes in Log Analytics for years isn't a realistic option, and the costs would be on par with the GDP of a small nation! The only Microsoft technology that meets this security need is Azure Data Explorer, and it's spectacular in this role.

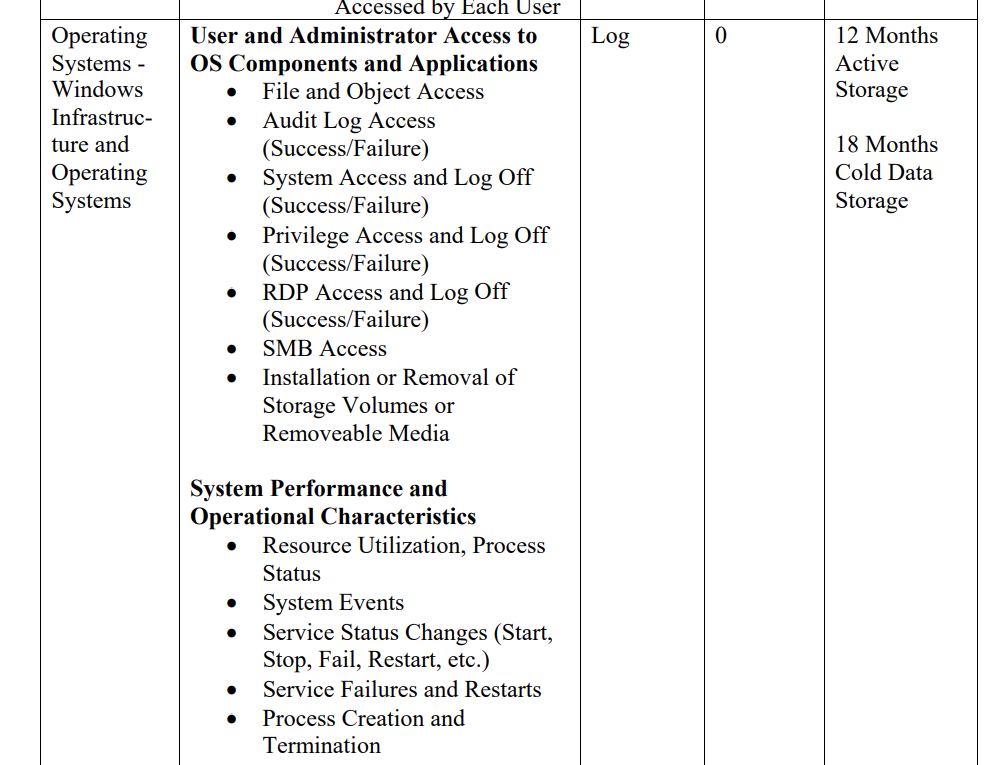

If we review the M-21-31 expectations for log collection and storage on Windows operating systems, we effectively have to store all events from Windows servers in a searchable state for 12 months and retain them for at least a further 18 months.
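To make that timeline concrete, here is a small Python sketch (my own illustration, not part of the Microsoft pattern) that works out when a given day's events can leave searchable storage and when they can finally be deleted, plus roughly how much raw data that implies at steady state. It assumes calendar-month arithmetic, ignores compression, and the 500 GB/day figure is purely hypothetical.

```python
from dataclasses import dataclass
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    # Length of the target month = first day of the following month minus first day of this one
    next_first = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    days_in_month = (next_first - date(year, month, 1)).days
    return date(year, month, min(d.day, days_in_month))


@dataclass
class RetentionWindow:
    searchable_until: date  # end of the 12-month searchable period
    retain_until: date      # end of the further 18-month retention period


def m21_31_window(event_day: date, searchable_months: int = 12,
                  further_months: int = 18) -> RetentionWindow:
    searchable_until = add_months(event_day, searchable_months)
    return RetentionWindow(searchable_until, add_months(searchable_until, further_months))


def retained_volume_tb(daily_gb: float, start: date) -> float:
    """Raw (uncompressed) TB held once the pipeline reaches steady state, 1 TB = 1000 GB."""
    window = m21_31_window(start)
    return daily_gb * (window.retain_until - start).days / 1000


# Hypothetical example: events written on 31 January 2023, 500 GB of raw events per day
window = m21_31_window(date(2023, 1, 31))
print(window.searchable_until, window.retain_until)  # 2024-01-31 2025-07-31
print(retained_volume_tb(500, date(2023, 1, 31)))     # 456.0
```

Even a modest hypothetical ingest rate lands in the hundreds of terabytes once the full 30-month window is held.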

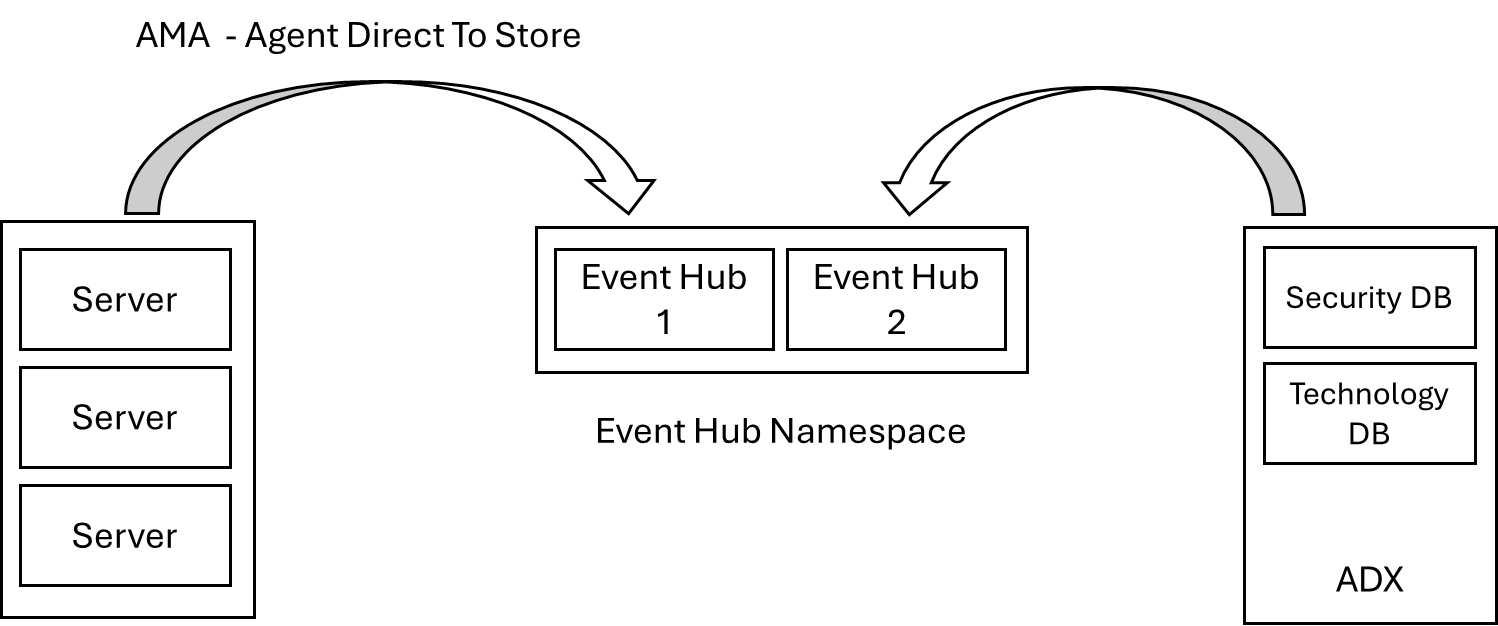

Not many people realise there is already a Microsoft pattern for doing this that is extremely cost effective, using the Azure Monitor Agent, Event Hubs and Azure Data Explorer. This solution has limitations, which I'll discuss, but I wanted to demonstrate how to implement the "official" Microsoft approach to this problem.

Solution Components

Our solution depends on a number of standard components from the Microsoft stack.

- Servers will have the Azure Monitor Agent (AMA) client installed and will use the Preview version of 'Direct to Store' Data Collection Rules.

- We need an Event Hub namespace. This example uses a single Event Hub. Due to the volume of logs in an enterprise environment, a Premium SKU Event Hub namespace is likely to be a necessity.

- Event data will be transformed with KQL as it is ingested into Azure Data Explorer.
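To get a feel for why Event Hub sizing matters, the sketch below estimates Standard-tier throughput units from a daily volume. It relies on the documented per-unit ingress limit of 1 MB per second or 1,000 events per second; the average event size and the 2x peak factor are hypothetical inputs you would replace with measurements from your own estate. An answer beyond what the Standard tier allows is a signal to look at Premium.

```python
import math


def required_throughput_units(daily_gb: float, avg_event_bytes: int,
                              peak_factor: float = 2.0) -> int:
    """Estimate Event Hubs Standard throughput units (TUs) for a daily ingest volume.

    Each TU allows up to 1 MB/s or 1,000 events/s of ingress, so the larger of
    the two requirements wins. peak_factor is a hypothetical allowance for
    bursts above the 24-hour average.
    """
    peak_bytes_per_sec = daily_gb * 1024**3 / 86_400 * peak_factor
    tus_for_bandwidth = math.ceil(peak_bytes_per_sec / 1024**2)
    tus_for_events = math.ceil(peak_bytes_per_sec / avg_event_bytes / 1000)
    return max(1, tus_for_bandwidth, tus_for_events)


# Hypothetical estate: 500 GB/day of events averaging 2 KB each
print(required_throughput_units(500, 2048))  # 12
```

Smaller average events push the event-count limit ahead of the bandwidth limit, so both need checking.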

AMA Client Install

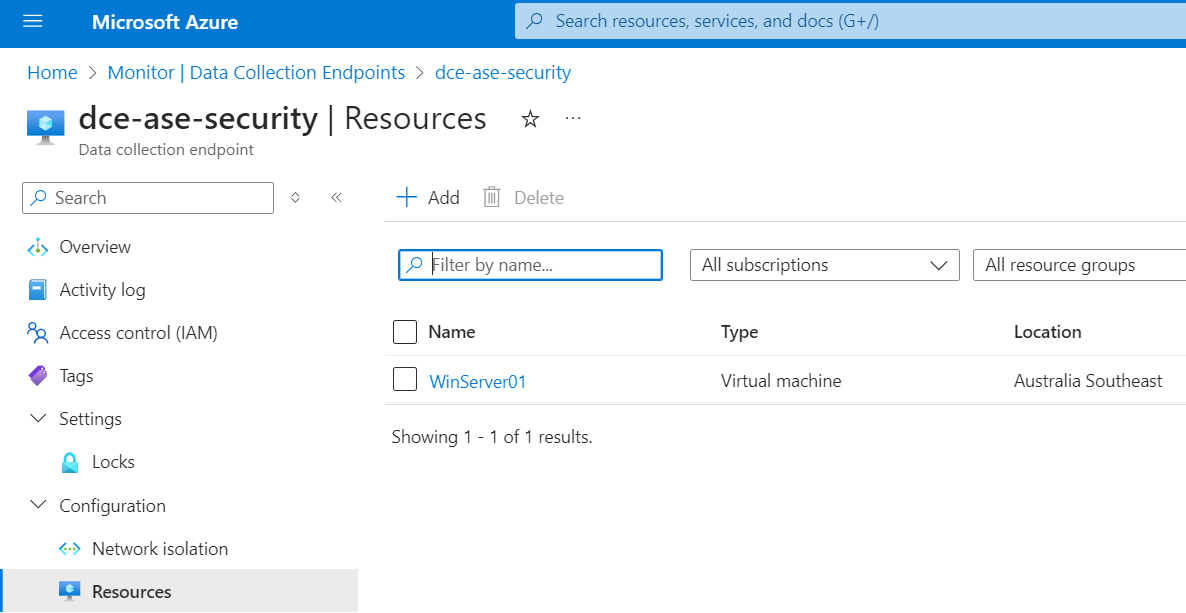

Most environments will already have the new AMA client installed on all Azure machines. Because I'm using a sandpit, I'll associate my server with a Data Collection Endpoint to ensure that the client is installed on the machine.

'Direct to Store' Data Collection Rule

If you haven't already, have a look at the previous blog, Adding data streams to Azure Data Explorer, for background. I'll presume that you have created an Event Hub for storing the incoming events and granted the ADX cluster permission to retrieve data from the Event Hub namespace.

I am going to create a 'Direct to Store' Data Collection Rule using Bicep. The Bicep code accepts the resource Id of the Event Hub you created as a parameter. Note that sending performance data, as required by M-21-31, is also possible with 'Direct to Store', but for this demonstration I'm only going to focus on Event logs.

```bicep
@description('The location of the resources')
param location string

@description('The Event Hub Id used for Windows Logs')
param eventHubResourceId string

resource dataCollectionRule 'Microsoft.Insights/dataCollectionRules@2022-06-01' = {
  name: 'WindowsEvent-write-to-EventHub'
  location: location
  kind: 'AgentDirectToStore'
  properties: {
    streamDeclarations: {}
    dataSources: {
      windowsEventLogs: [
        {
          streams: [
            'Microsoft-Event'
          ]
          // Collect every event level (0-5) from each channel
          xPathQueries: [
            'Application!*[System[(Level=1 or Level=2 or Level=3 or Level=4 or Level=0 or Level=5)]]'
            'System!*[System[(Level=1 or Level=2 or Level=3 or Level=4 or Level=0 or Level=5)]]'
            'Security!*[System[(Level=1 or Level=2 or Level=3 or Level=4 or Level=0 or Level=5)]]'
          ]
          name: 'eventLogsDataSource'
        }
      ]
    }
    destinations: {
      // Send straight to the Event Hub rather than to a Log Analytics workspace
      eventHubsDirect: [
        {
          eventHubResourceId: eventHubResourceId
          name: 'EventHub-WindowsEvent'
        }
      ]
    }
    dataFlows: [
      {
        streams: [
          'Microsoft-Event'
        ]
        destinations: [
          'EventHub-WindowsEvent'
        ]
      }
    ]
  }
}
```

Using Azure Monitor to send data to Event Hubs is a Preview service with some significant limitations, the main one being that it can only be used with servers hosted in Azure. A complete list of limitations is published in Microsoft's documentation for the preview.

Because this preview service isn't part of the core Azure Monitor experience, you will have trouble associating it with machines through the portal. It's a little cumbersome with Bicep too, so I'm going to use one of my custom PowerShell functions (Push-AzureObject) to create the association through the REST API. The association object is:

```json
{
  "id": "/subscriptions/XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX/resourcegroups/rg-test/providers/Microsoft.Compute/virtualMachines/<SERVER NAME>/providers/Microsoft.Insights/dataCollectionRuleAssociations/WindowsEventstoADX",
  "properties": {
    "description": "Association of Windows Events to ADX",
    "dataCollectionRuleId": "/subscriptions/XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX/resourceGroups/rg-monitor/providers/Microsoft.Insights/dataCollectionRules/WindowsEvent-write-to-EventHub"
  }
}
```

With the association object customised to point to my newly created Data Collection Rule and to the Id of the server I want to monitor, I'll read the JSON file into a hash table and push it directly to Azure:

```powershell
# Get an authorised header ($secret is assumed to already hold the app registration's client secret)
$authHeader = Get-Header -scope "azure" -Tenant "laurierhodes.info" -AppId "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX" `
    -secret $secret

# Retrieve an up-to-date list of resource provider API versions (once per session)
if (!$AzAPIVersions) { $AzAPIVersions = Get-AzureAPIVersions -header $authHeader -SubscriptionID "XXXXXXXXXXXXXXXXXXXXXXXXXXXX" }

# Read the association JSON into a hash table and PUT it to Azure with my custom functions
$file = "C:\temp\dcr-association.json"
Get-jsonfile -Path $file | Push-Azureobject -authHeader $authHeader -apiversions $AzAPIVersions
```

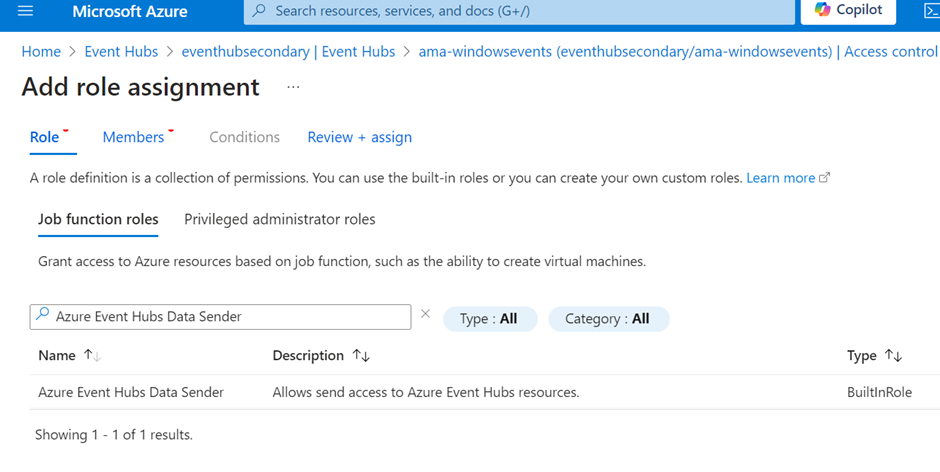

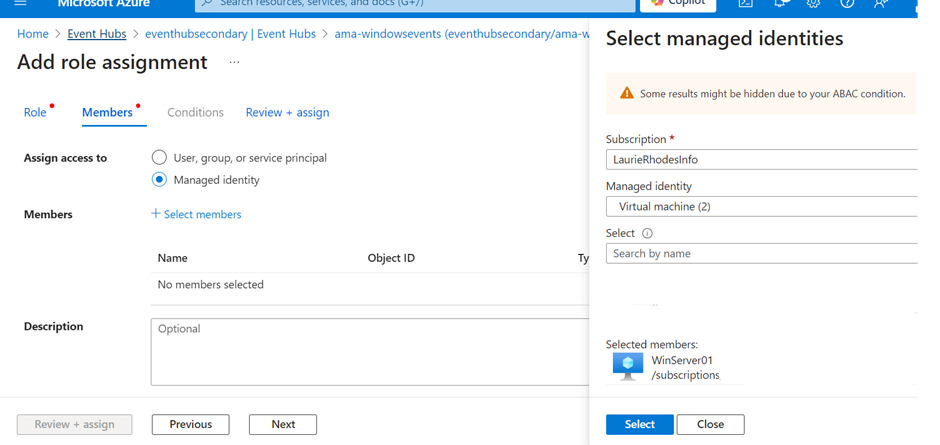
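For anyone who doesn't have my PowerShell helpers, the same association can be created with a single ARM REST PUT. This Python sketch only builds the request using the standard library. The VM and DCR IDs, the association name and the bearer token are hypothetical placeholders, and I'm assuming the `2022-06-01` API version (the one the Bicep DCR uses) is accepted for the association resource.

```python
import json
import urllib.request

ARM_ENDPOINT = "https://management.azure.com"
# Assumption: the Microsoft.Insights API version used for the DCR also serves associations
API_VERSION = "2022-06-01"


def build_dcr_association_request(vm_id: str, dcr_id: str, association_name: str,
                                  token: str, description: str = "") -> urllib.request.Request:
    """Build (without sending) an ARM PUT that associates a DCR with a VM."""
    url = (f"{ARM_ENDPOINT}{vm_id}/providers/Microsoft.Insights/"
           f"dataCollectionRuleAssociations/{association_name}?api-version={API_VERSION}")
    body = {"properties": {"description": description, "dataCollectionRuleId": dcr_id}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical placeholder IDs, mirroring the structure of the association object above
vm = ("/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/rg-test/"
      "providers/Microsoft.Compute/virtualMachines/vm-test-01")
dcr = ("/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/rg-monitor/"
       "providers/Microsoft.Insights/dataCollectionRules/WindowsEvent-write-to-EventHub")
req = build_dcr_association_request(vm, dcr, "WindowsEventstoADX", token="<access token>",
                                    description="Association of Windows Events to ADX")
print(req.get_method(), req.full_url)
```

Sending it is then a matter of `urllib.request.urlopen(req)`; a token for `https://management.azure.com` can be obtained with, for example, `az account get-access-token`.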

Event Hub Permissions

As described in the previous blog, the ADX cluster needs the Azure Event Hubs Data Receiver role assigned on the namespace. In addition, Azure-hosted servers need the Azure Event Hubs Data Sender role on the Event Hub used to collect their events. I'm going to assign this to my machine now.
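If you'd rather script the role assignments, this sketch builds the ARM request for one. The role definition GUIDs are the built-in Azure Event Hubs Data Sender and Data Receiver IDs as I understand them from Microsoft's built-in roles reference, so please verify them. I'm assuming the principal is the VM's managed identity (Data Sender, scoped to the Event Hub) or the ADX cluster's managed identity (Data Receiver, scoped to the namespace); every ID in the example is hypothetical.

```python
import json
import uuid

# Built-in role definition IDs as I understand them from Microsoft's built-in roles
# reference; verify before use.
EVENT_HUBS_DATA_SENDER = "2b629674-e913-4c01-ae53-ef4638d8f975"
EVENT_HUBS_DATA_RECEIVER = "a638d3c7-ab3a-418d-83e6-5f17a39d4fde"


def role_assignment_request(scope: str, role_guid: str, principal_id: str,
                            api_version: str = "2022-04-01") -> tuple[str, str]:
    """Return (url, json_body) for an ARM PUT granting role_guid to principal_id at scope."""
    subscription_id = scope.split("/")[2]  # scope starts with /subscriptions/<id>/...
    assignment_name = str(uuid.uuid4())    # role assignment names must be GUIDs
    url = (f"https://management.azure.com{scope}/providers/Microsoft.Authorization/"
           f"roleAssignments/{assignment_name}?api-version={api_version}")
    body = {
        "properties": {
            "roleDefinitionId": (f"/subscriptions/{subscription_id}/providers/"
                                 f"Microsoft.Authorization/roleDefinitions/{role_guid}"),
            "principalId": principal_id,
            # Managed identities are represented as service principals
            "principalType": "ServicePrincipal",
        }
    }
    return url, json.dumps(body)


# Hypothetical: let a VM's managed identity send to a single Event Hub
hub = ("/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/rg-monitor/"
       "providers/Microsoft.EventHub/namespaces/ehns-logs/eventhubs/windows-events")
url, body = role_assignment_request(hub, EVENT_HUBS_DATA_SENDER,
                                    principal_id="11111111-1111-1111-1111-111111111111")
```

The request is sent as a PUT with a bearer token, in the same way as any other ARM resource.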

I should now start receiving data in my Event Hub, and I can validate the objects being sent. The previous blog, Adding data streams to Azure Data Explorer, explains how to do this and also covers creating the ADX tables and functions, which I'll do next.

ADX Table Creation

I'll start by creating an 'Event' table based on the Log Analytics schema, using the function from a previous post, along with an EventRaw landing table for the incoming records.

.create-merge table EventRaw (records:dynamic)

.create-or-alter table EventRaw ingestion json mapping 'EventRawMapping' '[{"column":"records","Properties":{"path":"$.records"}}]'

.create-merge table Event (

TenantId: string,

SourceSystem: string,

TimeGenerated: datetime,

Timestamp: datetime,

Source: string,

EventLog: string,

Computer: string,

EventLevel: int,

EventLevelName: string,

ParameterXml: string,

EventData: string,

EventID: int,

RenderedDescription: string,

AzureDeploymentID: string,

Role: string,

EventCategory: int,

UserName: string,

Message: string,

MG: string,

ManagementGroupName: string,

Type: string,

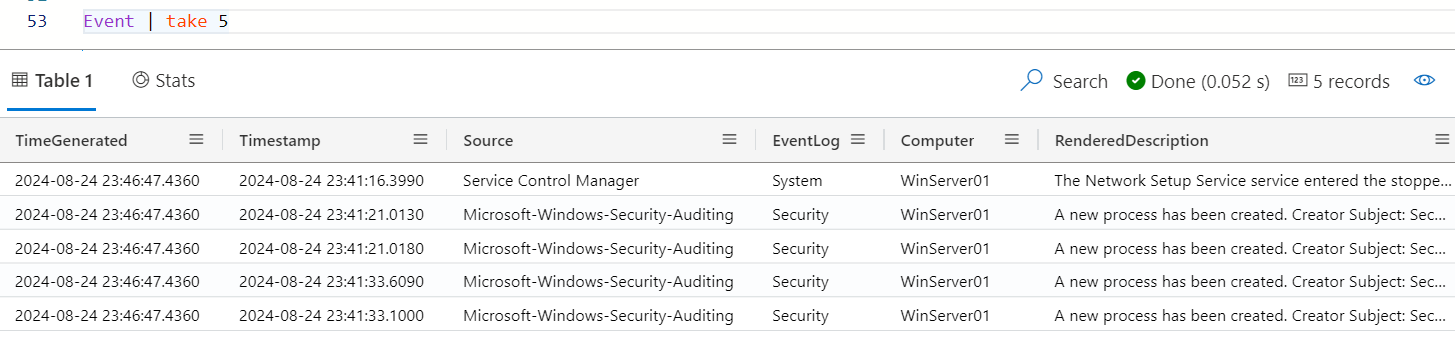

_ResourceId: string)I am amending the official schema to include a Timestamp field. With Log Analytics, TimeGenerated is the time the event was received into the system, not the time the event occurred. As my data arrives into ADX I will treat TimeGenerated the same way and preserve the actual event time in a Timestamp field, as occures with Defender tables. There are some important reasons for doing this that I will blog in the near future.
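The schema-generating function from my previous post isn't reproduced here. As a hypothetical stand-in, this Python sketch renders a `.create-merge table` command from (column, type) pairs and slots the extra Timestamp column in straight after TimeGenerated; only a subset of the Event columns is shown.

```python
# Hypothetical helpers, not the function from the previous post
def create_merge_table(table: str, columns: list[tuple[str, str]]) -> str:
    """Render an ADX .create-merge table command from (column, type) pairs."""
    body = ",\n    ".join(f"{name}: {kql_type}" for name, kql_type in columns)
    return f".create-merge table {table} (\n    {body})"


def insert_after(columns: list[tuple[str, str]], anchor: str,
                 new_column: tuple[str, str]) -> list[tuple[str, str]]:
    """Return a copy of columns with new_column placed straight after the anchor column."""
    position = [name for name, _ in columns].index(anchor) + 1
    return columns[:position] + [new_column] + columns[position:]


# A subset of the Log Analytics Event columns, for illustration
event_columns = [("TenantId", "string"), ("TimeGenerated", "datetime"), ("EventID", "int")]
print(create_merge_table("Event", insert_after(event_columns, "TimeGenerated",
                                               ("Timestamp", "datetime"))))
```

Because `.create-merge` only adds missing columns, re-running a generated command against an existing table is safe.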

The function that expands the raw data from the single dynamic records column (as it arrives in ADX) and transforms it into the Log Analytics format is a little complex.

```kusto
.create-or-alter function EventExpand() {
    EventRaw
    // Each Event Hub message carries an array of events in 'records'
    | mv-expand events = records
    // Parse the raw Windows event XML (named EventXml so it doesn't collide with the EventData output column)
    | extend EventXml = parse_xml(tostring(events.properties.RawXml))
    // ParameterXml: every <Data> value wrapped in a <Param> element
    | mv-apply Data = EventXml.Event.EventData.Data on (
        summarize ParameterXML = strcat_array(make_list(strcat("<Param>", tostring(Data['#text']), "</Param>")), "")
    )
    // EventData: rebuild the Log Analytics <DataItem><EventData> XML, keeping empty <Data> elements
    | mv-apply Data = EventXml.Event.EventData.Data on (
        summarize EventData = strcat(
            "<DataItem><EventData xmlns=\"http://schemas.microsoft.com/win/2004/08/events/event\">",
            strcat_array(
                make_list(
                    iff(
                        isnotnull(Data['#text']),
                        strcat("<Data Name=\"", tostring(Data['@Name']), "\">", tostring(Data['#text']), "</Data>"),
                        strcat("<Data Name=\"", tostring(Data['@Name']), "\" />")
                    )
                ),
                ""
            ),
            "</EventData></DataItem>"
        )
    )
    // Map onto the Event table schema: TimeGenerated is ingestion time, Timestamp is the event time
    | project
        TenantId=tostring(events.properties.Tenant),
        SourceSystem=tostring('AMA Agent'),
        TimeGenerated=todatetime(now()),
        Timestamp=todatetime(events.['time']),
        Source=tostring(events.properties.PublisherName),
        EventLog=tostring(events.properties.Channel),
        Computer=tostring(events.properties.LoggingComputer),
        EventLevel=toint(events.properties.EventLevel),
        EventLevelName=tostring(events.level),
        ParameterXml=tostring(ParameterXML),
        EventData=tostring(EventData),
        EventID=toint(events.properties.EventNumber),
        RenderedDescription=tostring(events.properties.EventDescription),
        AzureDeploymentID=tostring(''),
        Role=tostring(''),
        EventCategory=toint(events.properties.EventCategory),
        UserName=tostring(events.properties.UserName),
        // First line of the description; the whole string if it contains no CRLF
        Message=tostring(split(tostring(events.properties.EventDescription), "\r\n")[0]),
        MG=tostring(''),
        ManagementGroupName=tostring(''),
        Type=tostring('Event'),
        _ResourceId=tostring('')
}
```

```kusto
// Run EventExpand() on every ingestion into EventRaw and write the results to Event
.alter table Event policy update @'[{"Source": "EventRaw", "Query": "EventExpand()", "IsEnabled": true, "IsTransactional": true}]'
```

With the update policy enabled, I can watch all the events expected by the M-21-31 directive pour into my Azure Data Explorer cluster.
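Because EventExpand() is fiddly, it can help to check the expected output offline before trusting the update policy. This is a rough Python approximation of the same transformation for a single element of `records`, with `xml.etree.ElementTree` standing in for KQL's `parse_xml()`. The sample record is hypothetical: it only carries the properties the KQL reads and isn't a verbatim AMA payload.

```python
import xml.etree.ElementTree as ET

EVENT_NS = "http://schemas.microsoft.com/win/2004/08/events/event"


def expand_record(event: dict) -> dict:
    """Rough offline equivalent of EventExpand() for one element of 'records'."""
    props = event["properties"]
    root = ET.fromstring(props["RawXml"])
    data = root.findall(f"{{{EVENT_NS}}}EventData/{{{EVENT_NS}}}Data")
    # ParameterXml: every <Data> value wrapped in <Param>, empty values included
    parameter_xml = "".join(f"<Param>{d.text or ''}</Param>" for d in data)
    # EventData: named <Data> elements, self-closing when there is no value
    items = "".join(
        f'<Data Name="{d.get("Name")}">{d.text}</Data>' if d.text is not None
        else f'<Data Name="{d.get("Name")}" />'
        for d in data
    )
    description = props.get("EventDescription") or ""
    return {
        "Timestamp": event["time"],
        "Computer": props.get("LoggingComputer"),
        "EventID": int(props["EventNumber"]),
        "ParameterXml": parameter_xml,
        "EventData": f'<DataItem><EventData xmlns="{EVENT_NS}">{items}</EventData></DataItem>',
        "Message": description.split("\r\n")[0],
    }


# Hypothetical record containing only the properties the KQL reads
sample = {
    "time": "2023-05-01T10:00:00Z",
    "properties": {
        "LoggingComputer": "vm-test-01",
        "EventNumber": 4624,
        "EventDescription": "An account was successfully logged on.\r\n\r\nSubject:",
        "RawXml": (f'<Event xmlns="{EVENT_NS}"><System><EventID>4624</EventID></System>'
                   '<EventData><Data Name="TargetUserName">alice</Data>'
                   '<Data Name="LogonType">3</Data><Data Name="IpPort" /></EventData></Event>'),
    },
}
row = expand_record(sample)
print(row["Message"])       # An account was successfully logged on.
print(row["ParameterXml"])  # <Param>alice</Param><Param>3</Param><Param></Param>
```

Feeding a real message captured from the Event Hub through a script like this makes it easier to spot fields that don't map the way you expect.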

Azure Data Explorer is a columnar database. What makes it special for storing enormous quantities of data is that repeated strings in a column are stored once rather than being duplicated over and over again, as is typical with logging. Because of this, the effective compression can be mind-boggling, and a relatively small cluster can comfortably ingest hundreds of GB a day.

From a Security Operations perspective, the big data archive capability of ADX complements the real-time alert and incident management capabilities provided by Sentinel. While specific high-value events can be forwarded directly to Sentinel with Data Collection Rules, we can also ensure that the entire trove of event data is preserved for potential use in SOC hunting.
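The "store each distinct string once" idea is essentially dictionary encoding. This is a simplified Python illustration of that general technique, not ADX's actual on-disk format: a highly repetitive column collapses to a short dictionary plus small integer codes. The column contents and the 1-byte code size are hypothetical.

```python
def dictionary_encode(column: list[str]) -> tuple[list[str], list[int]]:
    """Store each distinct string once; represent the column as integer codes."""
    dictionary: list[str] = []
    positions: dict[str, int] = {}
    codes: list[int] = []
    for value in column:
        if value not in positions:
            positions[value] = len(dictionary)
            dictionary.append(value)
        codes.append(positions[value])
    return dictionary, codes


def dictionary_decode(dictionary: list[str], codes: list[int]) -> list[str]:
    """Rebuild the original column from the dictionary and codes."""
    return [dictionary[code] for code in codes]


# Hypothetical EventLog column: 10,000 rows but only three distinct values
column = ["Security"] * 9_000 + ["System"] * 700 + ["Application"] * 300
dictionary, codes = dictionary_encode(column)
print(dictionary)  # ['Security', 'System', 'Application']
raw_bytes = sum(len(v) for v in column)
encoded_bytes = sum(len(v) for v in dictionary) + len(codes)  # assumes 1-byte codes
print(raw_bytes, encoded_bytes)  # 79500 10025
```

Log columns such as computer names, channels and event sources behave like this, which is why the savings add up so quickly.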

Solution Limitations

Microsoft state that "There are no plans to bring this to on-premises or Azure Arc scenarios", which is a big problem. It's also problematic that the Event Hubs and Data Collection Rules have to be in the same region as the machines, because we don't want to deploy Event Hubs to every region where we have a small number of machines. Sending events with AMA 'Direct to Store' also incurs a hard-to-justify charge of 50c (AUD) per GB, which will mount up quickly with the volumes we are likely to ingest.
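To see how quickly that charge mounts up, here is the arithmetic as a tiny Python function. The 50c (AUD) per GB rate is the figure quoted above; the daily volumes are hypothetical, and Azure pricing changes, so check the current rate for your region.

```python
def direct_to_store_cost(daily_gb: float, days: int, price_per_gb: float = 0.50) -> float:
    """Per-GB data charge over a period; default rate is the 50c (AUD) per GB quoted above."""
    return daily_gb * days * price_per_gb


# Hypothetical daily volumes, costed over one year
for daily_gb in (50, 100, 500):
    print(f"{daily_gb} GB/day -> AUD {direct_to_store_cost(daily_gb, 365):,.2f} per year")
# 50 GB/day -> AUD 9,125.00 per year
# 100 GB/day -> AUD 18,250.00 per year
# 500 GB/day -> AUD 91,250.00 per year
```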

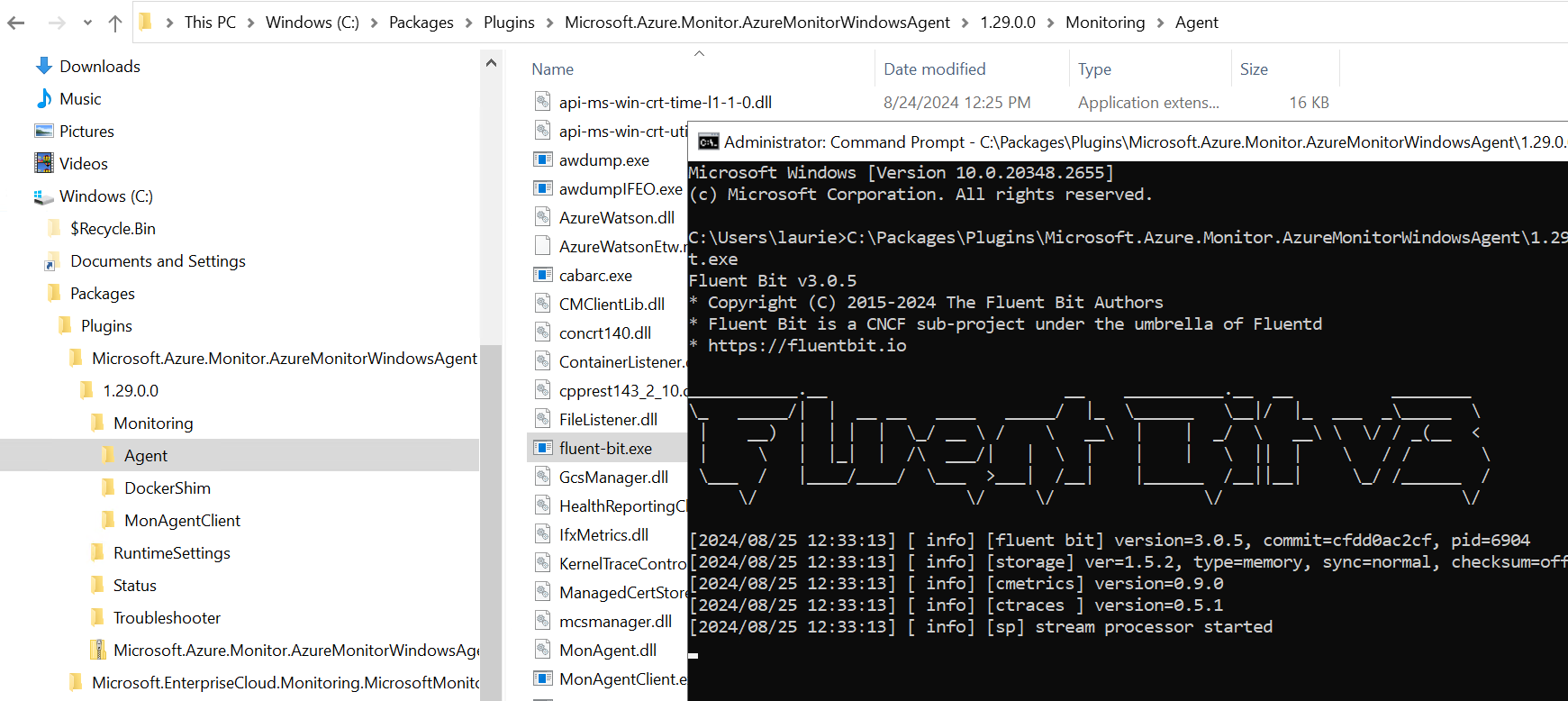

I am sure that Microsoft will evolve this early release capability. Until then, there is a usable workaround based on how Microsoft integrated Event Hub / Kafka data transfer with the AMA agent: the AMA client install includes a version of the open source Fluent-bit client.

Fluent-bit overcomes the current limitations of the AMA client and doesn't incur the per GB data charge. It can be deployed on Azure Arc, on-premises or AWS-hosted VMs to forward events to Event Hubs through its Kafka endpoint.

Fluent-bit does need its Kafka module compiled for use on Windows; I covered how to do this in a previous blog. Alternatively, the creators of Fluent-bit (who are former Microsoft staff) provide a commercially supported version with Windows Kafka support pre-compiled through Calyptia.
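As a sketch of what the Fluent-bit side can look like, the Python below renders a classic-format Fluent Bit configuration that reads the Application, System and Security channels with the `winevtlog` input and writes to the Event Hubs Kafka endpoint with the `kafka` output. The namespace and Event Hub names and the `EVENTHUB_CONNECTION_STRING` environment variable are hypothetical. The Event Hubs Kafka settings (port 9093, SASL_SSL, PLAIN, username `$ConnectionString`) follow Microsoft's Kafka guidance for Event Hubs, and the Kafka output still needs to be compiled for Windows as described above.

```python
def render_section(section: str, settings: list[tuple[str, str]]) -> str:
    """Render one classic-format Fluent Bit section with aligned key/value pairs."""
    width = max(len(key) for key, _ in settings)
    lines = [f"[{section}]"] + [f"    {key.ljust(width)} {value}" for key, value in settings]
    return "\n".join(lines)


def fluent_bit_config(namespace: str, event_hub: str,
                      channels: tuple[str, ...] = ("Application", "System", "Security"),
                      password: str = "${EVENTHUB_CONNECTION_STRING}") -> str:
    """Windows Event Log -> Event Hubs (Kafka endpoint) pipeline configuration."""
    source = render_section("INPUT", [
        ("Name", "winevtlog"),
        ("Channels", ",".join(channels)),
    ])
    sink = render_section("OUTPUT", [
        ("Name", "kafka"),
        ("Match", "*"),
        # Event Hubs exposes its Kafka endpoint on port 9093 of the namespace host
        ("Brokers", f"{namespace}.servicebus.windows.net:9093"),
        ("Topics", event_hub),  # the Event Hub name acts as the Kafka topic
        ("rdkafka.security.protocol", "SASL_SSL"),
        ("rdkafka.sasl.mechanism", "PLAIN"),
        # Literal username expected by Event Hubs; the password is the connection string
        ("rdkafka.sasl.username", "$ConnectionString"),
        # ${...} is Fluent Bit environment-variable substitution (hypothetical variable name)
        ("rdkafka.sasl.password", password),
    ])
    return f"{source}\n\n{sink}\n"


# Hypothetical namespace and Event Hub names
print(fluent_bit_config("ehns-logs", "windows-events"))
```

Keeping the connection string in an environment variable rather than in the file means the same configuration can be distributed to every server.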
