Azure Event Hubs are a core component of large-scale log collection for Security teams. They have a Kafka-compatible interface, which provides broad compatibility with other systems. Most of Microsoft's major systems natively support writing log data to Event Hubs.

Event Hubs provide resilience for Azure Data Explorer (ADX): security events are preserved when an issue or other situation requires the Azure Data Explorer cluster to be restarted. They also make it possible to run dual ADX systems in paired regions with identical copies of data for Disaster Recovery purposes.

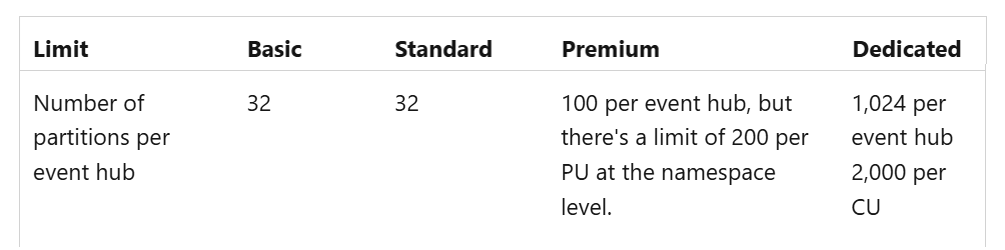

An enterprise environment is likely to use Premium SKU Event Hub namespaces to accommodate the volume of data ingest. With Premium SKU pricing, we have to be aware of the limit on the number of partitions that may be used with a Processing Unit.

What are Event Hubs?

Event Hubs are message queues or Kafka topics. Microsoft's technical documentation differentiates between the "Event Hub Namespace", which is the Azure service, and the Event Hubs, which are the actual queues or topics. Marketing material doesn't always observe that difference, so you will often see the namespace referred to as the Event Hub.
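To make the namespace versus Event Hub distinction concrete, here is a minimal sketch of how the two map onto Kafka client settings: the namespace supplies the broker endpoint and each Event Hub is addressed as a topic. The property keys follow the librdkafka naming used by common Kafka clients. The namespace name and connection string below are hypothetical placeholders, and the helper function is illustrative only.

```python
def kafka_settings(namespace: str, connection_string: str) -> dict:
    """Build Kafka client settings for an Event Hubs namespace.

    The namespace plays the role of the Kafka cluster; each Event Hub
    inside it is addressed as a topic.
    """
    return {
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",  # literal value, not a placeholder
        "sasl.password": connection_string,
    }


# Hypothetical namespace and placeholder secret, for illustration only
settings = kafka_settings("contoso-security-logs", "<namespace connection string>")

# The Event Hub itself is what a Kafka client treats as the topic
topic = "insights-logs-advancedhunting-deviceevents"
```

A Kafka producer or consumer would be given `settings` as its configuration and `topic` as the destination.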

What are Partitions?

Imagine our Event Hub as a multi-lane highway. The highway itself represents the Event Hub, which is designed to handle a large volume of vehicles (or events) moving from one place to another. The lanes on the highway are the partitions. Each lane allows vehicles to travel independently and in parallel. Similarly, each partition in an Event Hub allows events to be processed independently and in parallel. The number of partitions allocated to an Event Hub determines the "peak hour" capacity for data movement through that particular Event Hub.

If we have a maximum of 200 lanes that can be shared between different highways, we need to understand which highways are most likely to get congested. The catch is that we have to know how many lanes to allocate to each road before it gets used. We can scale up and add lanes in the future, but we can't scale down and remove lanes (without a massive amount of planning and additional work).

That's the analogy for what we have to understand when sizing the Event Hubs we create.
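The lane budget can be sketched as a simple check that a planned allocation stays within the shared total. The budget of 200 comes from the analogy above; the per-hub partition counts are made-up numbers for illustration, and you should confirm the real limit for your own namespace.

```python
PARTITION_BUDGET = 200  # the "200 lanes" from the analogy; confirm your own limit


def remaining_partitions(plan: dict[str, int], budget: int = PARTITION_BUDGET) -> int:
    """Return unallocated partitions, or raise if the plan exceeds the budget."""
    for hub, count in plan.items():
        if count < 1:
            raise ValueError(f"{hub}: an Event Hub needs at least one partition")
    used = sum(plan.values())
    if used > budget:
        raise ValueError(f"plan uses {used} partitions, budget is {budget}")
    return budget - used


# Hypothetical allocation: busier "highways" get more lanes
plan = {
    "insights-logs-advancedhunting-deviceevents": 4,
    "insights-logs-advancedhunting-devicenetworkevents": 8,
    "insights-logs-signinlogs": 2,
}
print(remaining_partitions(plan))  # 186
```

Because lanes cannot easily be removed later, it is safer to leave headroom in the budget than to spend it all up front.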

Data Monitoring KQL

Most Security teams are going to want to preserve Defender and Entra data long term with Azure Data Explorer. All of this data can be monitored with KQL so it's a good place to start for estimating partition counts for Event Hubs to be used with ADX.

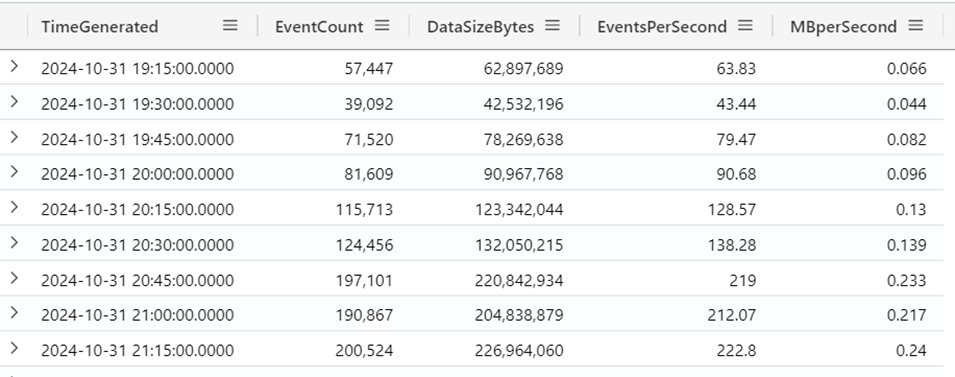

The KQL below looks at the past 24 hours of data in 15-minute intervals and lets you see how many MB of data per second would typically flow through that Event Hub.

    // Set analysis parameters
    let SampleWindow = 15m;    // 15-minute sampling window
    let AnalysisPeriod = 24h;  // Look-back period
    let StartTime = ago(AnalysisPeriod);
    let EndTime = now();
    // Calculate metrics per time block
    let MetricsPerBlock =
        DeviceEvents
        | where TimeGenerated > StartTime and TimeGenerated <= EndTime
        | summarize
            EventCount = count(),
            DataSizeBytes = sum(estimate_data_size(*))
            by bin(TimeGenerated, SampleWindow)
        | extend
            EventsPerSecond = round(EventCount / (SampleWindow / 1sec), 2),
            // 1024.0 forces real division; long / long would truncate
            MBperSecond = round(DataSizeBytes / 1024.0 / 1024.0 / (SampleWindow / 1sec), 3);
    MetricsPerBlock

A partition has two limitations to be aware of:

- Ingress: Each partition can handle up to 1 MB per second or 1,000 events per second, whichever comes first.

- Egress: Each partition can handle up to 2 MB per second or 4,096 events per second.

The output from the KQL shows that nothing in this data set is close to 1 MB per second or 1,000 events per second. We can quite comfortably say that a single partition on an Event Hub created for these events will meet our data requirements.

We probably want to factor in at least 30% growth when sizing the partitions on Event Hubs.
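The sizing arithmetic can be sketched as follows, using the per-partition ingress limits and the 30% growth allowance described above. The function name and the sample peak values are hypothetical; substitute the highest MBperSecond and EventsPerSecond values from your own query output.

```python
import math

INGRESS_MB_PER_SEC = 1.0       # per-partition ingress limit quoted above
INGRESS_EVENTS_PER_SEC = 1000  # per-partition event-rate limit quoted above


def partitions_needed(peak_mb_per_sec, peak_events_per_sec, growth=0.30):
    """Partitions required for the observed peak plus a growth allowance."""
    scale = 1.0 + growth
    by_size = peak_mb_per_sec * scale / INGRESS_MB_PER_SEC
    by_count = peak_events_per_sec * scale / INGRESS_EVENTS_PER_SEC
    # Whichever limit is hit first decides the count; never go below one
    return max(1, math.ceil(max(by_size, by_count)))


print(partitions_needed(0.25, 180))   # 1
print(partitions_needed(2.4, 1500))   # 4
```

In the second call the data volume (2.4 MB/s grown to 3.12 MB/s) is the binding constraint, not the event rate, so the result rounds up to four partitions.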

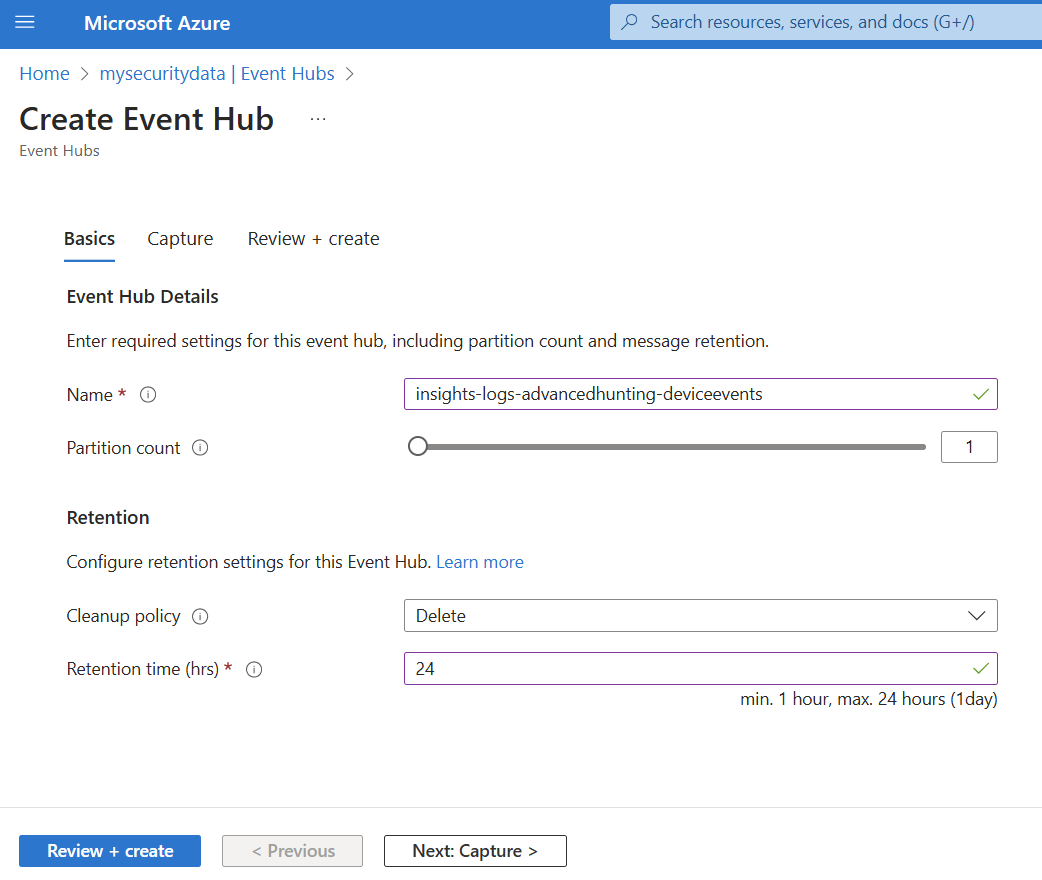

I can now go ahead and create my Event Hub.

TIP: If you are scoping a Production environment, you should probably adjust the KQL to sample at 5-minute intervals rather than the 15 minutes shown in this example. A smaller sampling window ensures that true peaks in throughput aren't accidentally averaged away. That said, with the Premium SKU on Event Hub namespaces you can increase the number of partitions in response to load issues after the Event Hub has been created.
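A small illustration of how a wider window hides a burst: the sketch below takes a made-up series of 5-minute average rates and compares the peak seen at 5-minute and 15-minute resolution. The sample values and the helper name are hypothetical.

```python
def peak_rate(samples_mb_per_sec, samples_per_bin):
    """Highest average rate after grouping consecutive samples into bins."""
    bins = [
        samples_mb_per_sec[i:i + samples_per_bin]
        for i in range(0, len(samples_mb_per_sec), samples_per_bin)
    ]
    return max(sum(b) / len(b) for b in bins)


# Hypothetical 5-minute averages (MB/s) covering 30 minutes, with one short burst
five_minute = [0.5, 0.5, 2.0, 0.5, 0.5, 0.5]

print(peak_rate(five_minute, 1))  # 2.0 at 5-minute resolution
print(peak_rate(five_minute, 3))  # 1.0 at 15-minute resolution
```

The same traffic looks like a 1.0 MB/s peak at 15-minute resolution and a 2.0 MB/s peak at 5-minute resolution, which would change the partition count.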

For reference, it's useful to use the same Event Hub names that Microsoft creates automatically when streaming data to Event Hubs. The current list (as of November 2024) is below.

- insights-logs-noninteractiveusersigninlogs

- insights-logs-provisioninglogs

- insights-logs-riskyusers

- insights-logs-userriskevents

- insights-logs-advancedhunting-alertevidence

- insights-logs-advancedhunting-alertinfo

- insights-logs-auditlogs

- insights-logs-advancedhunting-cloudappevents

- insights-logs-advancedhunting-deviceevents

- insights-logs-advancedhunting-devicefilecertificateinfo

- insights-logs-advancedhunting-devicefileevents

- insights-logs-advancedhunting-deviceimageloadevents

- insights-logs-advancedhunting-deviceinfo

- insights-logs-advancedhunting-devicelogonevents

- insights-logs-advancedhunting-devicenetworkevents

- insights-logs-advancedhunting-devicenetworkinfo

- insights-logs-advancedhunting-deviceprocessevents

- insights-logs-advancedhunting-deviceregistryevents

- insights-logs-advancedhunting-emailattachmentinfo

- insights-logs-advancedhunting-emailevents

- insights-logs-advancedhunting-emailpostdeliveryevents

- insights-logs-advancedhunting-emailurlinfo

- insights-logs-advancedhunting-identitydirectoryevents

- insights-logs-advancedhunting-identitylogonevents

- insights-logs-advancedhunting-identityqueryevents

- insights-logs-managedidentitysigninlogs

- insights-logs-microsoftgraphactivitylogs

- insights-logs-serviceprincipalsigninlogs

- insights-logs-signinlogs

- insights-logs-advancedhunting-urlclickevents

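The names in the list follow a visible pattern: a fixed prefix followed by the lower-cased table or log category name. A minimal sketch of that pattern is below. It is inferred from the list itself rather than from any documented rule, so verify the result against the names Microsoft actually creates; the function name is hypothetical.

```python
def default_hub_name(category: str, advanced_hunting: bool = False) -> str:
    """Derive an Event Hub name from a table or log category name.

    Assumption: the prefix plus lower-cased name pattern seen in the list
    above holds for the category being named.
    """
    prefix = "insights-logs-advancedhunting-" if advanced_hunting else "insights-logs-"
    return prefix + category.lower()


print(default_hub_name("DeviceEvents", advanced_hunting=True))
# insights-logs-advancedhunting-deviceevents
print(default_hub_name("SignInLogs"))
# insights-logs-signinlogs
```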