Pricing Microsoft's Big Data SIEM Capabilities

At under $3,000 AUD per month, Azure Data Explorer can ingest 1TB of security data per day and retain it for 18 months, a level of cost-efficiency most security professionals overlook.
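
The scale behind that headline figure can be checked with some simple arithmetic. The sketch below rests on a few assumptions of its own: an average month of 365.25/12 days, volumes measured as raw (uncompressed) data, and the $3,000 AUD figure treated as an upper bound on the monthly cost. ADX compresses ingested data, so the storage actually billed will be smaller than the raw figure. The helper names are hypothetical.

```python
# Rough sizing sketch for the "1TB/day, 18 months, under $3,000 AUD/month" claim.
# Assumptions (not from the article): average month length, raw uncompressed volumes.

AVG_DAYS_PER_MONTH = 365.25 / 12  # 30.4375 days


def retained_raw_tb(daily_tb: float, months: int) -> float:
    """Raw volume held at steady state when `months` of daily ingest is retained."""
    return daily_tb * months * AVG_DAYS_PER_MONTH


def cost_per_ingested_tb(monthly_cost_aud: float, daily_tb: float) -> float:
    """Upper-bound cost per TB ingested, given a monthly cost ceiling."""
    return monthly_cost_aud / (daily_tb * AVG_DAYS_PER_MONTH)


if __name__ == "__main__":
    print(f"Raw data retained: {retained_raw_tb(1.0, 18):.1f} TB")
    print(f"Cost per TB ingested: under ${cost_per_ingested_tb(3000.0, 1.0):.2f} AUD")
```

In other words, the cluster holds on the order of half a petabyte of raw telemetry while each ingested terabyte costs roughly $100 AUD or less.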

Sending Windows DNS Server logs to Azure Data Explorer

DNS logs are a critical resource for a SOC team. They provide forensic evidence of what has happened within an environment, and they can serve as an early warning system for identifying malware and bad actors.

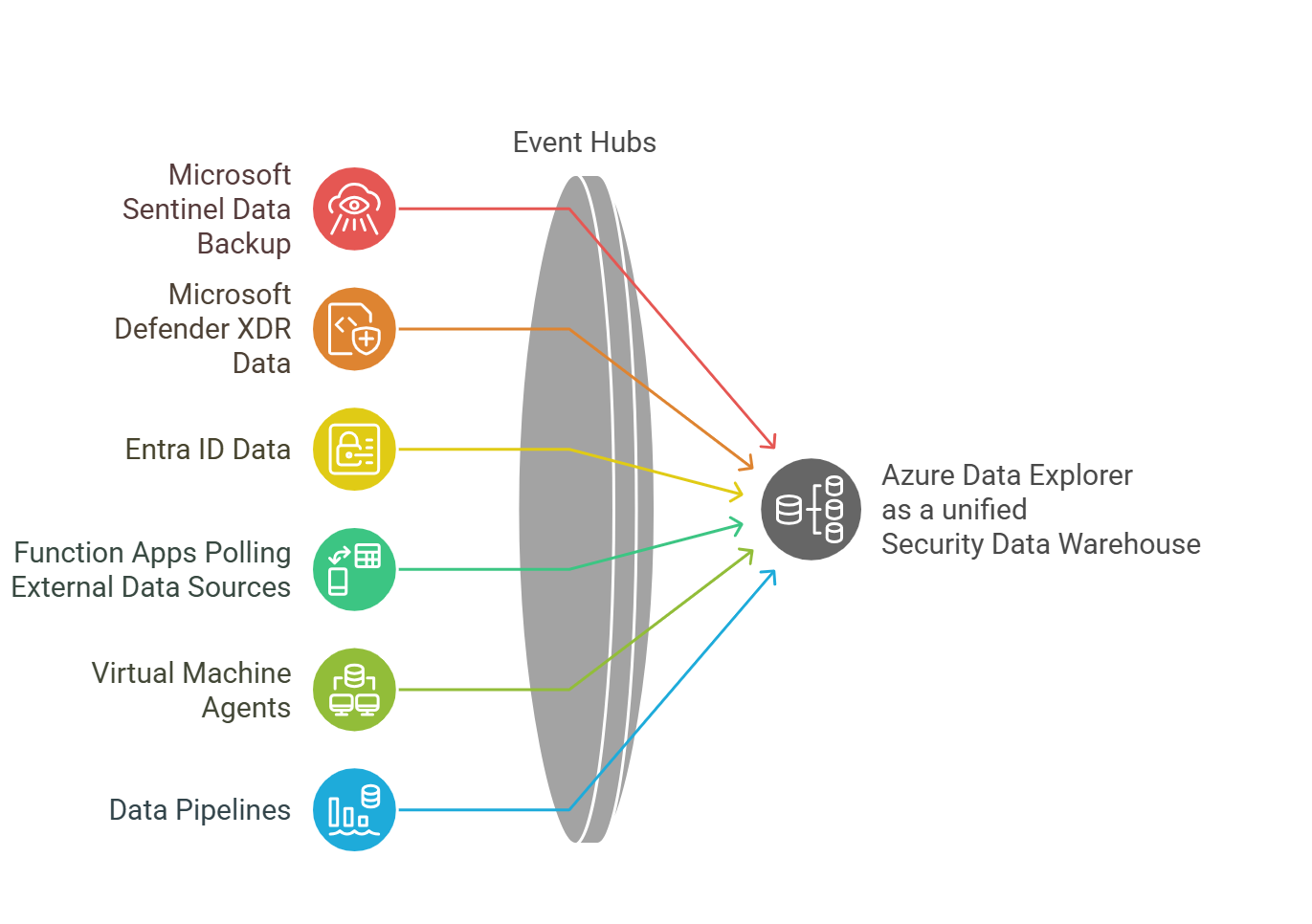

Azure Data Explorer - Security Data Warehouse: A Reference Implementation

The challenge of efficiently storing and analyzing massive volumes of security telemetry has long been a pain point for security operations teams.

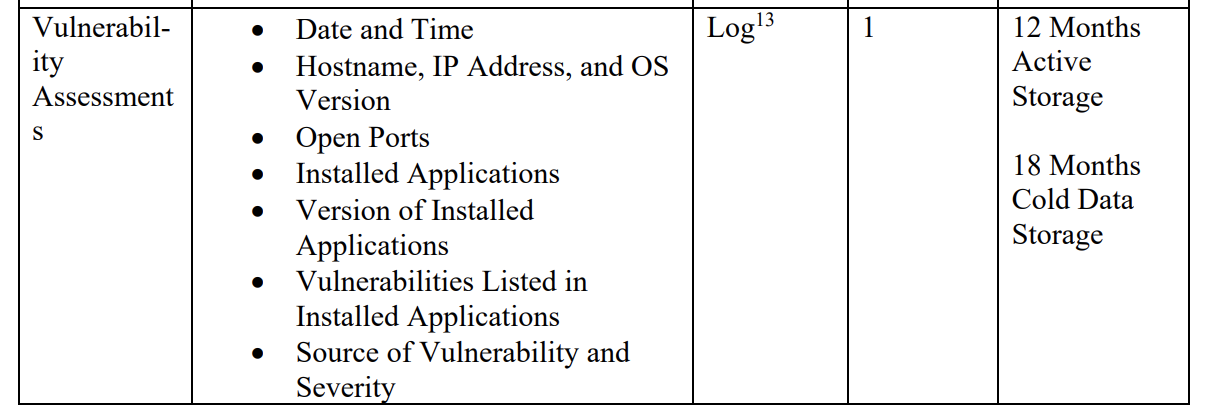

Collecting Defender Vulnerability Data - a PowerShell Core Durable Function

M-21-31 represents the current expectation for Federal Government Agencies in the United States and Australia.

PowerShell Core - Durable Functions - A Security Engineer's Introduction

In my current security-related projects, I'm doing a lot of work with Azure's native automation capabilities. It has been a major surprise to realise that most security providers argue for purchasing XSOAR licenses to add automation to Microsoft Sentinel, when all the tooling for automation existed in Azure well before Sentinel was a product.

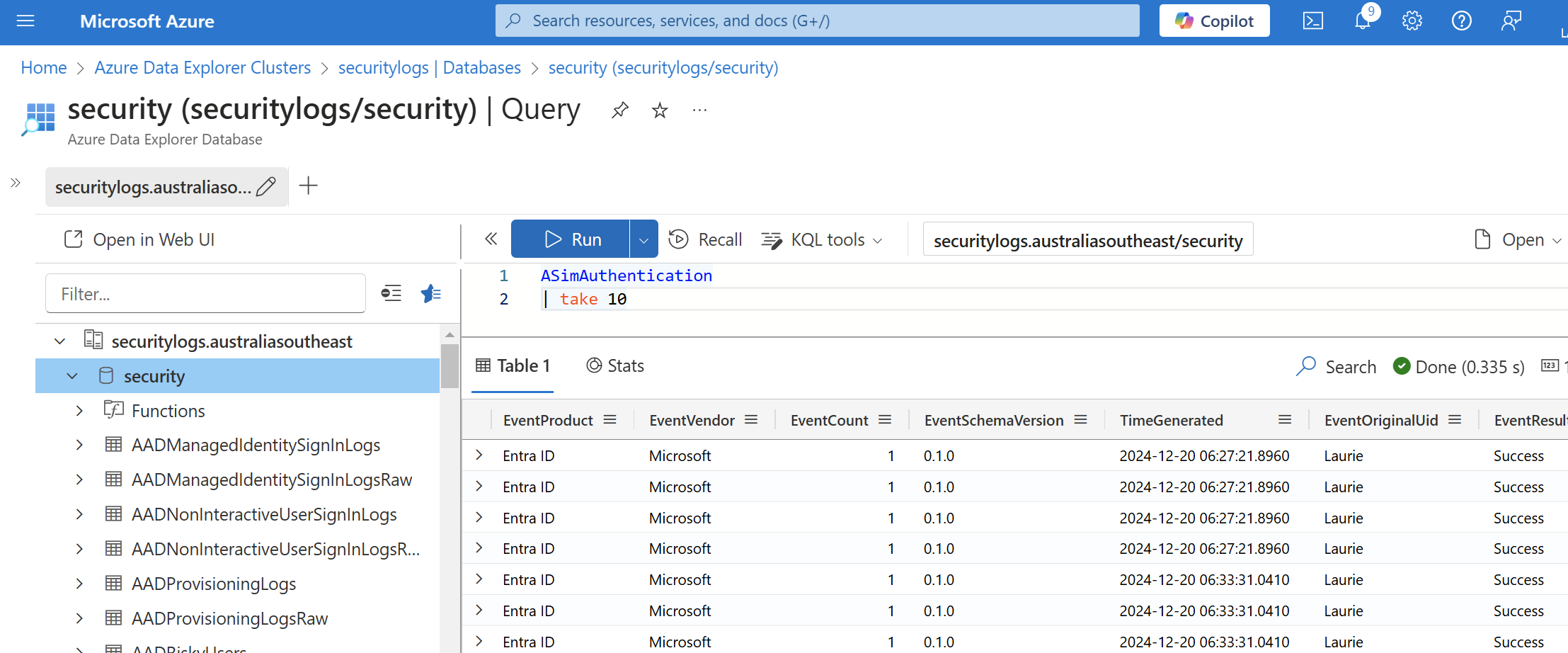

ASIM integration with Azure Data Explorer

I'm convinced that Security teams within every major organisation will be running Azure Data Explorer (ADX) clusters in the near future.

AI Attack Simulation with Microsoft Sentinel

Very quietly, the last two weeks have seen the general availability of AI capability take another big step forward. Anthropic's announcement of Model Context Protocol support in Claude Desktop gives Artificial Intelligence broad access to tools when it is asked to perform tasks.

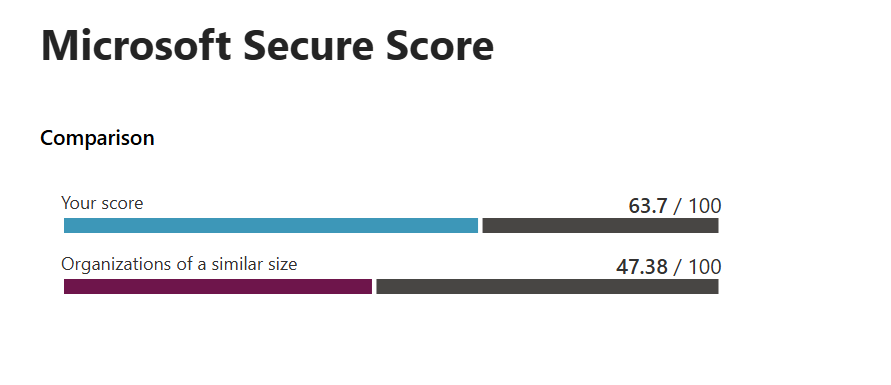

Getting Defender's 'Secure Score' with Logic Apps and ADX

This article shows how Logic Apps can be used with Azure Data Explorer (ADX). Normally, I would ensure that all data ingested into ADX came through Event Hubs, as they provide resiliency and support regional redundancy for clusters.

Rethinking the role of Azure PowerShell Modules

In the early days of Azure, well before the arrival of Bicep, most engineers grappled with deployment automation. ARM templates were tough going, and PowerShell scripts seemed a useful alternative. We all learned that supporting a production environment built on hundreds of different functions that changed with every version update was impossible. New Azure services required the newest versions of modules, which broke existing deployment and support scripts. This scenario was a modern equivalent of "DLL hell".