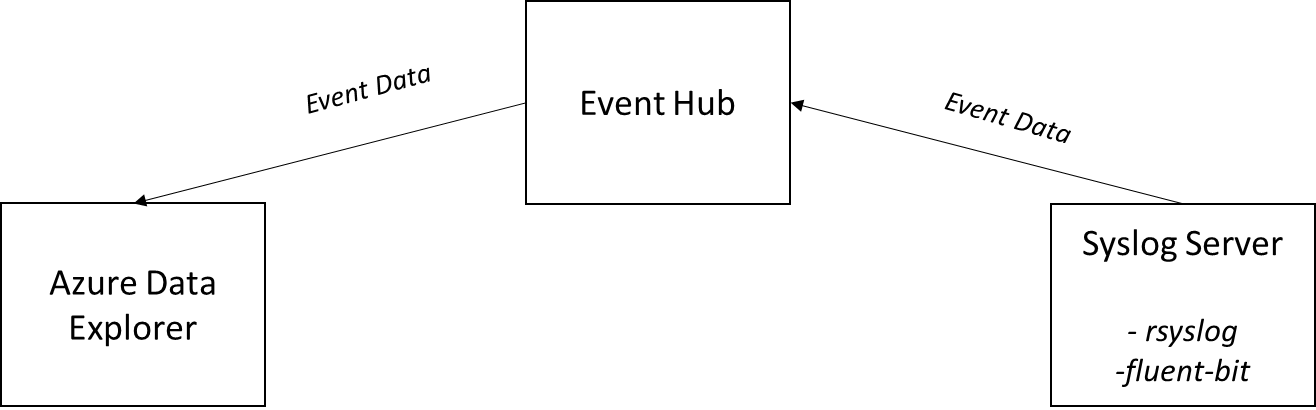

Security operations teams need access to large volumes of historical syslog data so they can trace incursions after an event has occurred. Azure Data Explorer (ADX) is ideal as a Sentinel-integrated, low-cost system for archiving this data.

Microsoft's out-of-the-box approach for log forwarding is to use the Azure Monitor Agent (AMA) with its "Direct to Storage" capability to forward logs to ADX. In the fine print, this solution has problems: it isn't supported on machines residing outside of Azure, and there is a per-GB data charge (50 cents per GB in Australia) that makes AMA an expensive option for syslog.

Open Source Options

Two significant open source projects should be considered as alternative methods for forwarding syslog data to ADX.

The rsyslog project has native capabilities for writing directly to Event Hubs. rsyslog is the default syslog server on most Linux distributions. It's written in C and has multi-threaded support for writing massive volumes of data to Event Hubs quickly. It also has a great process for customising messages using templates to align fields with what Microsoft Sentinel expects. The omazureeventhubs module does require compiling from source, though, which can be a support issue when handing a solution over to DevOps teams.

The fluent-bit project also has enormous industry usage. It is also written in C and supports writing directly to Event Hubs with its native Kafka output module. The Kafka output requires compiling for deployment on Windows machines, but Kafka support exists in its default package on Linux. Typically its syslog filtering uses RegEx, which can result in messages that aren't parsed properly being dropped, and there is an additional overhead of post-processing to align the facility and severity values with the fields expected by Microsoft Sentinel. There is also a problem with fluent-bit's use of the at character '@' in a field name when working with time. This is an illegal character for ADX data input.

The two projects work extremely well together with fluent-bit developed to work directly with rsyslog.

By using both projects together, rsyslog controls syslog message formatting, which avoids the fluent-bit issues with RegEx and illegal characters in ADX. Using fluent-bit as the transport avoids asking DevOps teams to stay on top of C compiling as a matter of BAU support, and sidesteps some of the minor quirks that exist in the rsyslog Event Hubs module. As both projects are written in C, they work together as an extremely fast and lightweight solution for getting large volumes of logs from syslog to ADX.

Deploying the Solution

The forwarding of syslog data requires working on three separate systems.

- The syslog server itself requires rsyslog and fluent-bit installed. Both services will need to be configured to format and forward data to a dedicated Event Hub (Kafka topic).

- An Event Hub will need to be configured to receive data from fluent-bit. This needs to be created first so that the topic / Event Hub details and keys can be used in the fluent-bit configuration on the syslog server.

- Azure Data Explorer will require a data connection, mapping and tables created for storing the syslog data.

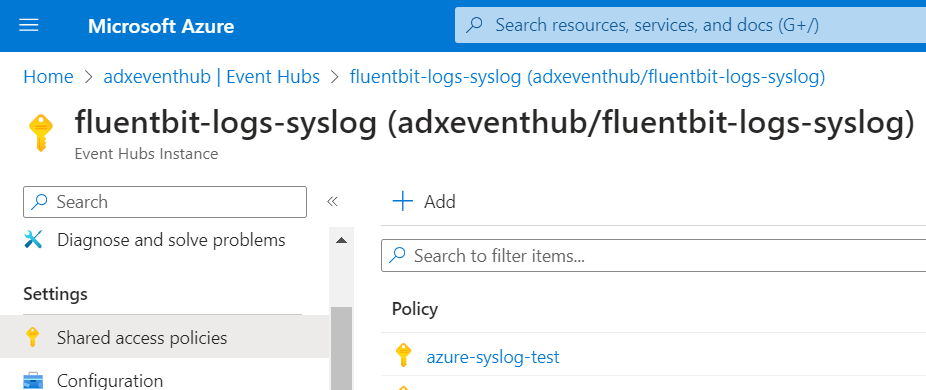

Event Hub Configuration

Presuming you have an Event Hub Namespace created, create a dedicated Event Hub for the fluent-bit syslog data stream. You also need to create a dedicated shared access policy to allow the fluent-bit client to send data to this Event Hub.

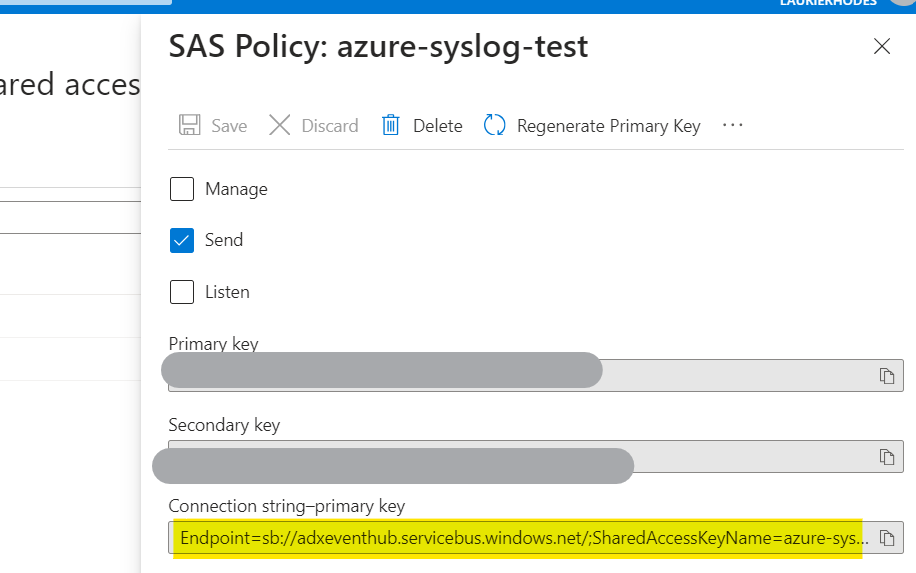

In the details of the Shared Access Signature policy, ensure that the only permission granted is Send, then copy the connection string. This will provide the details needed for the fluent-bit configuration on the syslog machine.

Syslog server - fluent-bit configuration

Presuming that rsyslog and fluent-bit have been installed as packages on the syslog server, edit the fluent-bit configuration file:

    vi /etc/fluent-bit/fluent-bit.conf

I am going to use fluent-bit's inbuilt syslog server as the data source of messages. This isn't going to be exposed to the world, just accessible from rsyslog on the same machine.

    # Listen on loopback only: rsyslog forwards from the same machine
    [INPUT]
        Name    syslog
        Parser  json
        Listen  127.0.0.1
        Port    5140
        Mode    tcp

    [OUTPUT]
        Name    kafka
        Match   *
        brokers adxeventhub.servicebus.windows.net:9093
        topics  fluentbit-logs-syslog
        rdkafka.security.protocol SASL_SSL
        rdkafka.sasl.username     $ConnectionString
        rdkafka.sasl.password     Endpoint=sb://adxeventhub.servicebus.windows.net/;SharedAccessKeyName=azure-syslog-test;SharedAccessKey=XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX=;EntityPath=fluentbit-logs-syslog
        rdkafka.sasl.mechanism    PLAIN

Note that as a parser, I'm avoiding using the standard RegEx parsers that are shown in standard fluent-bit documentation as I'm going to use rsyslog for parsing messages and formatting the output into JSON. The json parser is an inbuilt parser that comes with all standard distributions for fluent-bit.

Azure Event Hubs exposes a Kafka-compatible endpoint. The configuration above needs to match the details you have copied from your Event Hub.
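To show how the copied connection string lines up with the Kafka settings above, here is a minimal Python sketch. The function names `parse_connection_string` and `kafka_settings` are made up for illustration and are not part of fluent-bit or any Azure SDK. It assumes the connection string includes an `EntityPath`, as the one in this walkthrough does, and that the Kafka endpoint uses port 9093 as in the configuration above.

```python
from urllib.parse import urlparse


def parse_connection_string(conn_str):
    """Split 'Key=Value;Key=Value' pairs. Values may themselves contain '='."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue
        # partition() splits on the first '=' only, so a trailing '=' in the
        # shared access key stays part of the value.
        key, _, value = segment.partition("=")
        parts[key] = value
    return parts


def kafka_settings(conn_str):
    """Derive the fluent-bit Kafka output values from the connection string."""
    parts = parse_connection_string(conn_str)
    # Endpoint looks like sb://<namespace>.servicebus.windows.net/
    host = urlparse(parts["Endpoint"]).hostname
    return {
        "brokers": f"{host}:9093",
        "topics": parts["EntityPath"],
        "rdkafka.security.protocol": "SASL_SSL",
        "rdkafka.sasl.mechanism": "PLAIN",
        # The username is the literal text $ConnectionString, not a variable.
        "rdkafka.sasl.username": "$ConnectionString",
        # The password is the whole connection string.
        "rdkafka.sasl.password": conn_str,
    }


if __name__ == "__main__":
    example = (
        "Endpoint=sb://adxeventhub.servicebus.windows.net/;"
        "SharedAccessKeyName=azure-syslog-test;"
        "SharedAccessKey=PLACEHOLDER=;"
        "EntityPath=fluentbit-logs-syslog"
    )
    for key, value in kafka_settings(example).items():
        print(key, value)
```

The point to notice is that the namespace host becomes the broker, the `EntityPath` becomes the topic, and the full connection string is reused as the SASL password.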

Syslog server - rsyslog configuration

I am going to create a dedicated rsyslog template for forwarding messages to the fluent-bit server.

    vi /etc/rsyslog.d/fluentbit.conf

This template is going to do three things.

- It will normalise the received timestamp into "Zulu" time. I discovered inconsistent timestamp formatting can be a reason for fluent-bit to drop messages with its parsing. Ultimately I want all events recorded with Zulu time in ADX.

- It is going to take the standard syslog message fields and write them as JSON using the Microsoft field names used with Sentinel. I want all the data that goes to ADX to be queryable from Sentinel as if it were part of the typical Syslog Azure Monitor table, and leveraging rsyslog's translation capability for this keeps data import easy later in the process.

- The "omfwd" action simply passes my newly formatted message from rsyslog to fluent-bit.

    # Use a template for constructing a UTC date time format for the
    # originating message
    template(name="Syslog_DateFormat" type="list") {
        property(name="timestamp" dateformat="year" date.inUTC="on")
        constant(value="-")
        property(name="timestamp" dateformat="month" date.inUTC="on")
        constant(value="-")
        property(name="timestamp" dateformat="day" date.inUTC="on")
        constant(value="T")
        property(name="timestamp" dateformat="hour" date.inUTC="on")
        constant(value=":")
        property(name="timestamp" dateformat="minute" date.inUTC="on")
        constant(value=":")
        property(name="timestamp" dateformat="second" date.inUTC="on")
        constant(value=".")
        property(name="timestamp" dateformat="subseconds" date.inUTC="on")
        constant(value="Z")
    }

    # This template formats standard syslog properties as JSON lines.
    # The lowercase "eventtime" matches the captured message, raw table and
    # ingestion mapping used later in this walkthrough.
    template(name="SentinelSyslogFormat" type="list" option.jsonf="on") {
        property(outname="eventtime" name="$!sntdate" format="jsonf")
        property(outname="HostName" name="hostname" format="jsonf")
        property(outname="ProcessID" name="procid" format="jsonf")
        property(outname="ProcessName" name="syslogtag" format="jsonf")
        property(outname="Facility" name="syslogfacility-text" format="jsonf")
        property(outname="SeverityLevel" name="syslogseverity-text" format="jsonf")
        property(outname="SyslogMessage" name="msg" format="jsonf")
    }

    # Construct the GMT date format from the message
    set $!sntdate = exec_template("Syslog_DateFormat");

    action(type="omfwd" Target="127.0.0.1" queue.type="LinkedList" Port="5140" Protocol="tcp" template="SentinelSyslogFormat")

Both fluent-bit and rsyslog need to be restarted for the new configurations to take effect.
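To make the intended output of the two rsyslog templates concrete, the following Python sketch builds the same shape of JSON line from a timestamp and a handful of syslog properties. It is only an illustration of the target format, not how rsyslog works internally, and the function names `zulu` and `sentinel_line` are hypothetical. The lowercase `eventtime` key follows the message captured from the Event Hub later in this walkthrough.

```python
import json
from datetime import datetime, timedelta, timezone


def zulu(ts):
    """Render a timezone-aware datetime as UTC: YYYY-MM-DDTHH:MM:SS.ffffffZ."""
    return ts.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f") + "Z"


def sentinel_line(ts, hostname, procid, tag, facility, severity, msg):
    """Build one JSON line using the Sentinel-style field names."""
    record = {
        "eventtime": zulu(ts),
        "HostName": hostname,
        "ProcessID": procid,
        "ProcessName": tag,
        "Facility": facility,
        "SeverityLevel": severity,
        "SyslogMessage": msg,
    }
    return json.dumps(record)


if __name__ == "__main__":
    # A message stamped in a UTC+11 local time is normalised to Zulu time.
    local = datetime(2024, 4, 2, 6, 2, 40, 474514,
                     tzinfo=timezone(timedelta(hours=11)))
    print(sentinel_line(local, "rhelsyslog", "-", "kernel:",
                        "kern", "info", "example message"))
```

Normalising to UTC before the message leaves rsyslog means every downstream system sees one timestamp format, whatever time zone the originating host uses.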

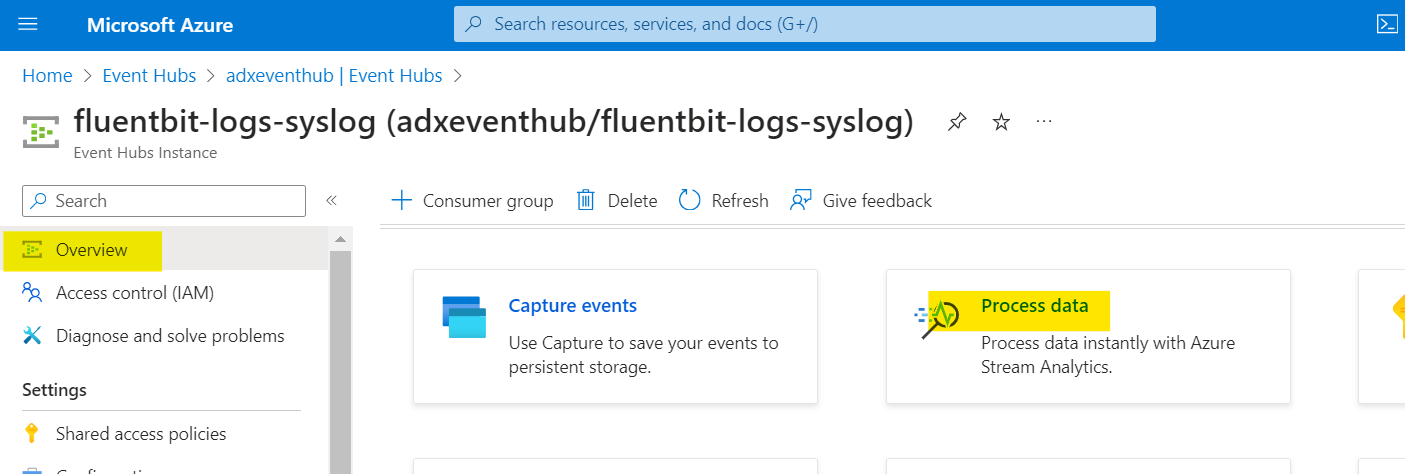

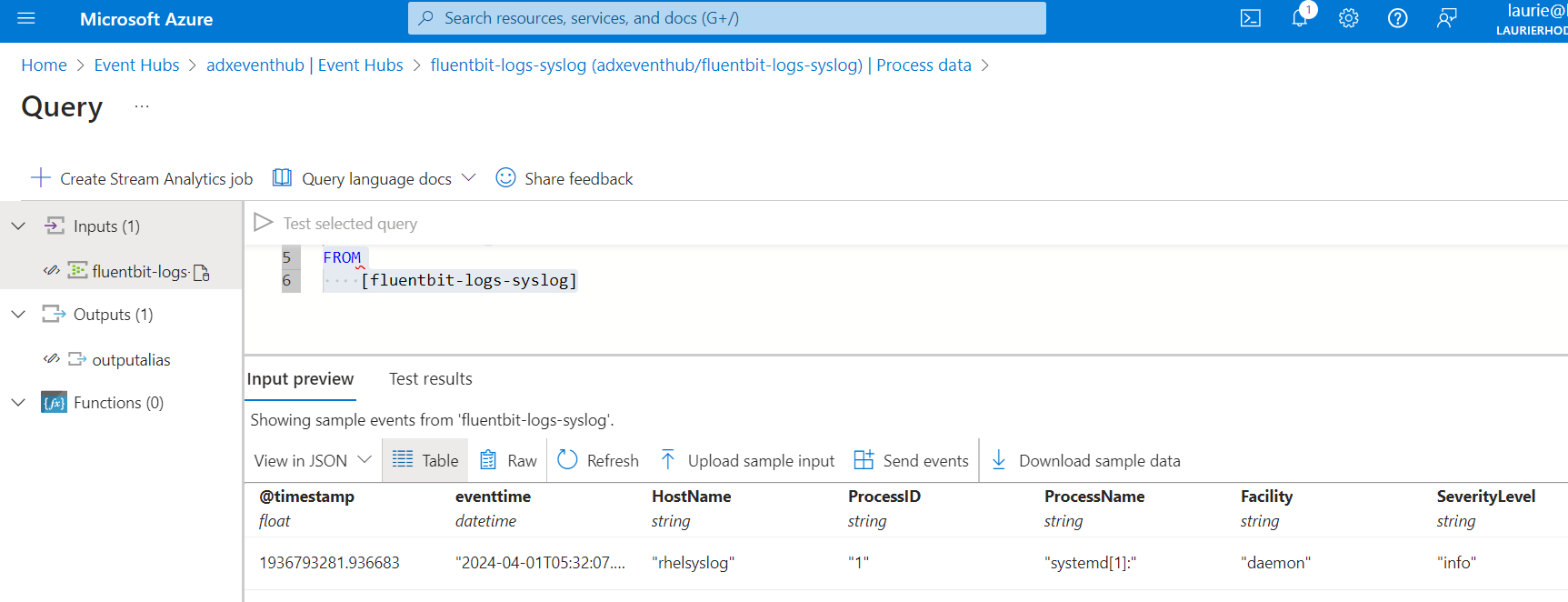

Back on my Event Hub, I can start a Stream Analytics job to watch the first event messages start coming in. This may take a few minutes.

Under the Process Data option off the Event Hub Overview page you can enable real-time insights.

As data arrives it will be populated in a table form. To make things easy for creating the Raw table feed in ADX, I'll toggle the data feed to "Raw" and copy one of the messages.

The random message I copied is shown below. I won't be able to use the fluent-bit '@timestamp' field with ADX because the '@' symbol makes it an illegal property name, but I can use the new eventtime field for the same purpose.

    {
        "@timestamp": 2878414530.878304,
        "eventtime": "2024-04-01T19:02:40.4745140Z",
        "HostName": "rhelsyslog",
        "ProcessID": "-",
        "ProcessName": "kernel:",
        "Facility": "kern",
        "SeverityLevel": "info",
        "SyslogMessage": "BIOS-e820: [mem 0x000000003ffff000-0x000000003fffffff] usable",
        "EventProcessedUtcTime": "2024-04-01T21:50:55.2153543Z",
        "PartitionId": 0,
        "EventEnqueuedUtcTime": "2024-04-01T19:02:54.4490000Z"
    }
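As a quick way to decide which properties of a captured message belong in the raw table, this Python sketch filters the sample above. It rests on two assumptions. The name check (letters, digits and underscores only) is deliberately stricter than ADX itself and is used here simply because it rules out '@timestamp'. The three metadata fields are treated as additions made by the Stream Analytics preview, not as part of the payload sent by rsyslog. The function name `raw_table_fields` is hypothetical.

```python
import json
import re

# Assumption: a conservative rule for "safe" column names.
SAFE_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")

# Fields added by the Stream Analytics preview, not sent by rsyslog.
PREVIEW_METADATA = {"EventProcessedUtcTime", "PartitionId", "EventEnqueuedUtcTime"}

SAMPLE = """
{
  "@timestamp": 2878414530.878304,
  "eventtime": "2024-04-01T19:02:40.4745140Z",
  "HostName": "rhelsyslog",
  "ProcessID": "-",
  "ProcessName": "kernel:",
  "Facility": "kern",
  "SeverityLevel": "info",
  "SyslogMessage": "BIOS-e820: [mem 0x000000003ffff000-0x000000003fffffff] usable",
  "EventProcessedUtcTime": "2024-04-01T21:50:55.2153543Z",
  "PartitionId": 0,
  "EventEnqueuedUtcTime": "2024-04-01T19:02:54.4490000Z"
}
"""


def raw_table_fields(sample_json):
    """Return the payload fields that can be mapped to raw table columns."""
    record = json.loads(sample_json)
    return [
        name for name in record
        if SAFE_NAME.match(name) and name not in PREVIEW_METADATA
    ]


if __name__ == "__main__":
    print(raw_table_fields(SAMPLE))
```

The seven names that remain are the ones used for the raw table and ingestion mapping in the next section.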

ADX - fluent-bit raw data ingestion

With Azure Data Explorer I need to create a transitory table for receiving raw data streams from my Event Hub. This will be done by enabling a data connection. The first task is to create the "Raw" table and an ingestion mapping to tell ADX how to map the data from the incoming record into the columns of my table. I know what the raw message fields are going to be from the JSON object I just copied from Stream Analytics on my Event Hub.

Raw table

In the query window of my ADX database, I create my table for Raw data.

    .create-merge table FluentBitSyslogRaw (
        eventtime: string,
        HostName: string,
        ProcessID: int,
        ProcessName: string,
        Facility: string,
        SeverityLevel: string,
        SyslogMessage: string
    )

At this stage, I don't care too much about data types. The data is being ingested as JSON, so each value is likely to be just text or an integer (values that aren't encased in quote marks). This data will be transformed into its correct data types later, so simply using strings for the values at the moment is absolutely fine.

Ingestion Mapping

I need to create a mapping so that the data connection between the Event Hub and my ADX table knows how the data stream needs to be written to the right table. The mapping uses the dollar sign "$" to represent the record being processed and maps each property on that object to the column name I have specified.

.create-or-alter table FluentBitSyslogRaw ingestion json mapping 'FluentBitSyslogRawMapping' '[{"column":"eventtime","Properties":{"path":"$.eventtime"}},{"column":"HostName","Properties":{"path":"$.HostName"}},{"column":"ProcessID","Properties":{"path":"$.ProcessID"}},{"column":"ProcessName","Properties":{"path":"$.ProcessName"}},{"column":"Facility","Properties":{"path":"$.Facility"}},{"column":"SeverityLevel","Properties":{"path":"$.SeverityLevel"}},{"column":"SyslogMessage","Properties":{"path":"$.SyslogMessage"}}]'
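Because the mapping is repetitive (each column maps to the property of the same name), it can be generated, not hand-typed. The Python sketch below is one way to do that. The helper names `build_mapping` and `mapping_command` are hypothetical, and the output is only text to paste into the ADX query window.

```python
import json

RAW_COLUMNS = [
    "eventtime",
    "HostName",
    "ProcessID",
    "ProcessName",
    "Facility",
    "SeverityLevel",
    "SyslogMessage",
]


def build_mapping(columns):
    """Map each column to the JSON property of the same name ($ = the record)."""
    return [{"column": c, "Properties": {"path": f"$.{c}"}} for c in columns]


def mapping_command(table, mapping_name, columns):
    """Render the .create-or-alter ingestion mapping command as text."""
    # Compact separators keep the JSON on a single line without spaces.
    body = json.dumps(build_mapping(columns), separators=(",", ":"))
    return (
        f".create-or-alter table {table} ingestion json mapping "
        f"'{mapping_name}' '{body}'"
    )


if __name__ == "__main__":
    print(mapping_command("FluentBitSyslogRaw",
                          "FluentBitSyslogRawMapping",
                          RAW_COLUMNS))
```

JSON paths are case sensitive, so the names in `RAW_COLUMNS` need to match the property names in the incoming message exactly.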

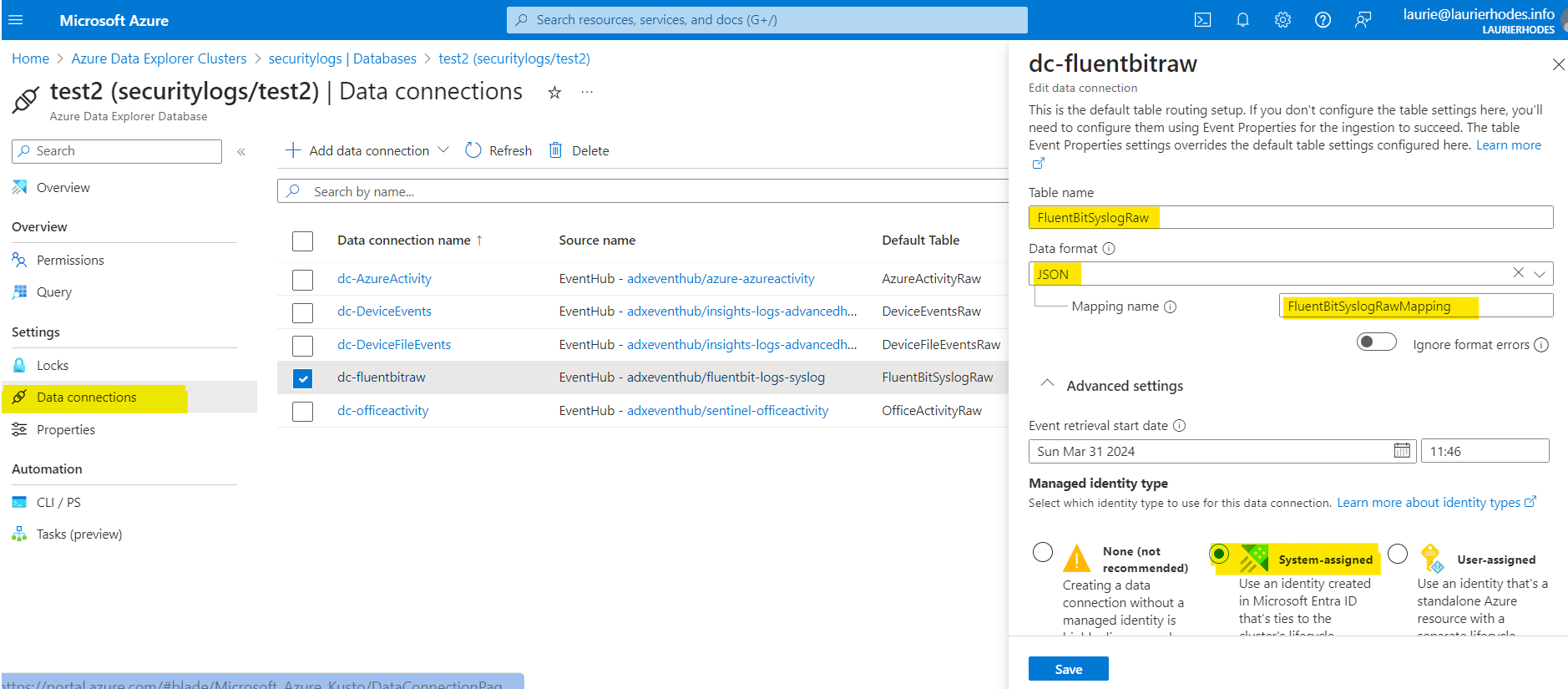

Data Connection Creation

ADX data connections are created from the database blade in ADX. As I create an Event Hub data connection, I'm required to specify the Event Hub I'm connecting to.

As part of the data connection I specify that I want incoming data to be written to the FluentBitSyslogRaw table using the mapping I've just created.

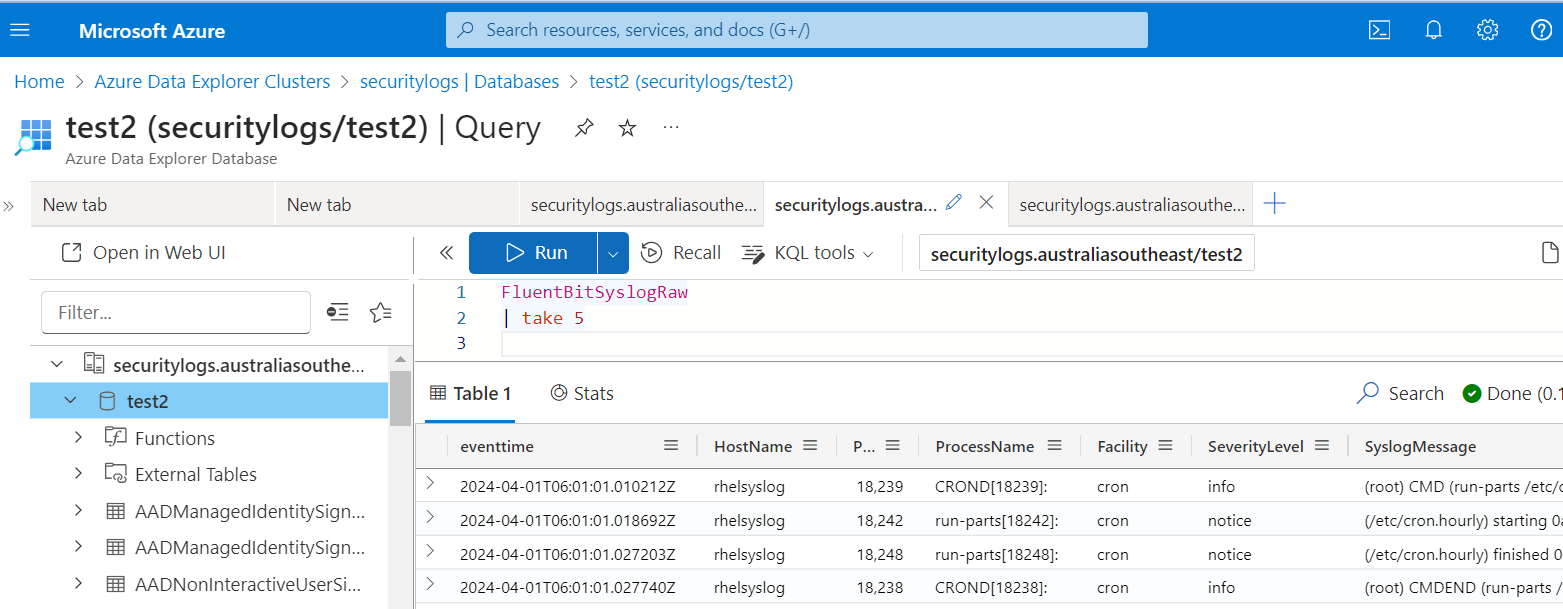

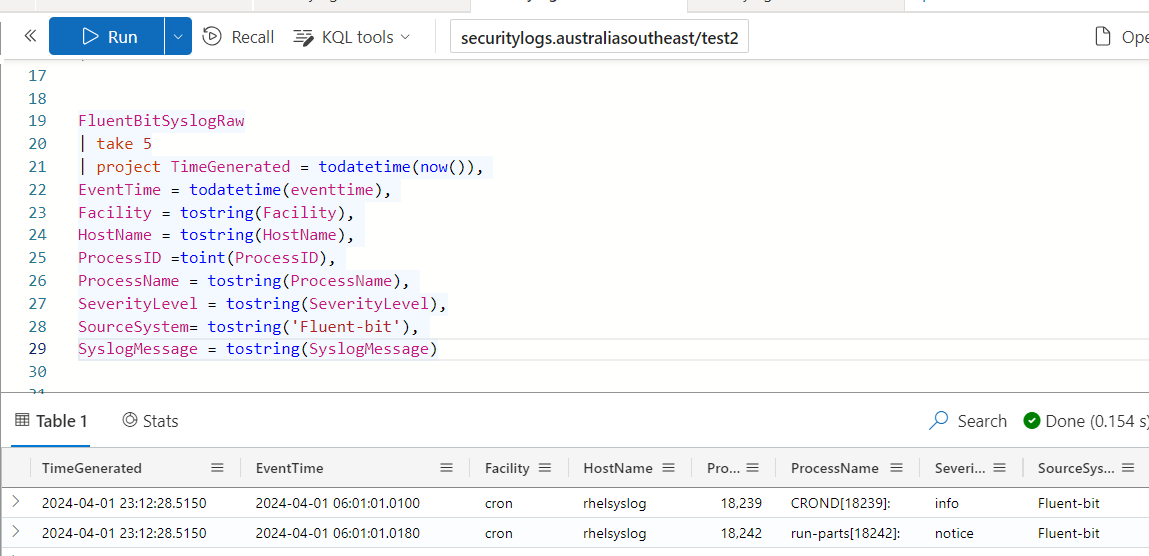

Testing the Raw data feed

With the data connector enabled, I can now query the Raw log feed using a KQL query.

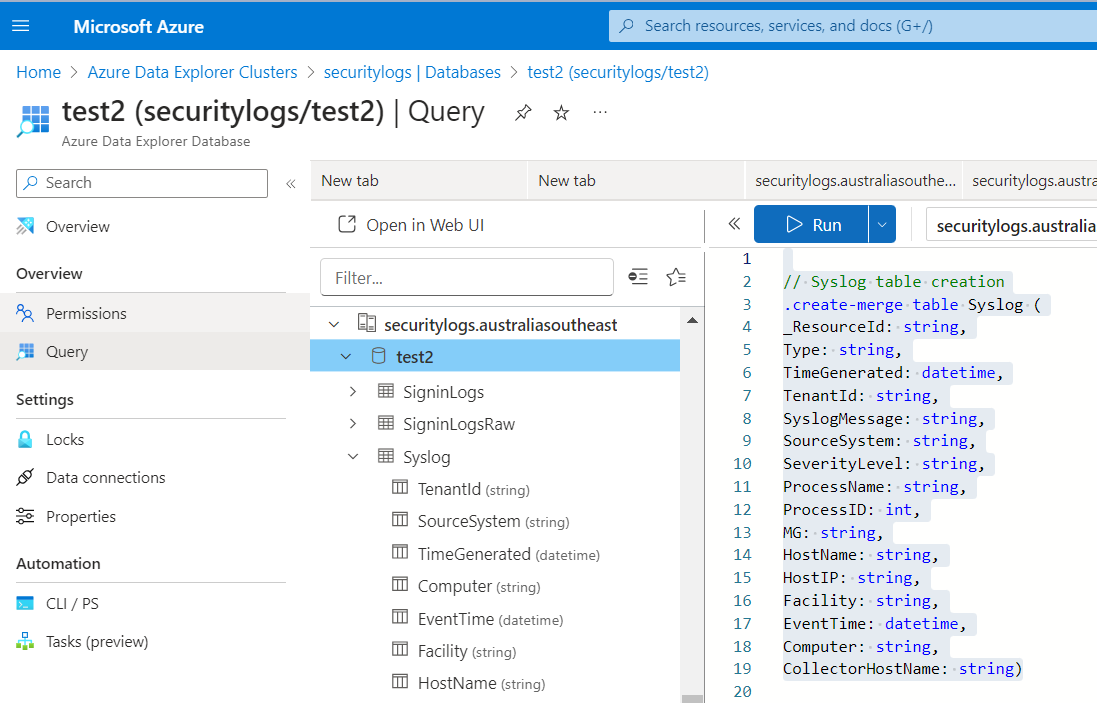

The last step is to create the final Syslog table in ADX to mirror what I would see in Sentinel.

Syslog table creation

To get my Syslog table looking the same as the Syslog table in Sentinel, I'll use Microsoft's published schema for creating the right field data types. The schema can be referenced here:

https://learn.microsoft.com/en-us/azure/azure-monitor/reference/tables/syslog

Take care to note any fields that aren't strings!

The KQL must be run within the query window of my database.

    // Syslog table creation
    .create-merge table Syslog (
        TenantId: string,
        SourceSystem: string,
        TimeGenerated: datetime,
        Computer: string,
        EventTime: datetime,
        Facility: string,
        HostName: string,
        SeverityLevel: string,
        SyslogMessage: string,
        ProcessID: int,
        HostIP: string,
        ProcessName: string,
        MG: string,
        CollectorHostName: string,
        Type: string,
        _ResourceId: string
    )

The new table will become visible from the ADX query blade on my database.

Using KQL I can test a query that will project the data from my Raw table feed into the Syslog table format. KQL is case sensitive and here I spot a mistake that I'm forwarding 'eventtime' from rsyslog as a field name that needs to use an uppercase "E". For production I'll want to go back and change that with my rsyslog template but it serves as a good representation of how projecting the fields from the Raw table into the format in the final destination table works!
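As a rough mental model of that projection, the Python sketch below applies the same kind of per-record conversion to one raw message. It is not KQL and is not what ADX runs. The helpers `to_int`, `to_datetime` and `expand` are hypothetical names that imitate the behaviour of converting text to typed values, including returning nothing when a value such as "-" cannot be converted to an integer.

```python
from datetime import datetime, timezone


def to_int(value):
    """Return an int, or None when the text is not numeric (e.g. '-')."""
    try:
        return int(value)
    except (TypeError, ValueError):
        return None


def to_datetime(text):
    """Parse a Zulu timestamp, trimming fractions longer than microseconds."""
    body = text.rstrip("Z")
    if "." in body:
        whole, frac = body.split(".", 1)
        body = f"{whole}.{frac[:6].ljust(6, '0')}"
        fmt = "%Y-%m-%dT%H:%M:%S.%f"
    else:
        fmt = "%Y-%m-%dT%H:%M:%S"
    return datetime.strptime(body, fmt).replace(tzinfo=timezone.utc)


def expand(raw, now=None):
    """Project one raw record into the Syslog table's column layout."""
    return {
        "TenantId": "",
        "SourceSystem": "Fluent-bit",
        "TimeGenerated": now or datetime.now(timezone.utc),
        "Computer": "",
        # Note the rename: lowercase 'eventtime' in, 'EventTime' out.
        "EventTime": to_datetime(raw["eventtime"]),
        "Facility": str(raw["Facility"]),
        "HostName": str(raw["HostName"]),
        "SeverityLevel": str(raw["SeverityLevel"]),
        "SyslogMessage": str(raw["SyslogMessage"]),
        "ProcessID": to_int(raw["ProcessID"]),
        "HostIP": "",
        "ProcessName": str(raw["ProcessName"]),
        "MG": "",
        "CollectorHostName": "",
        "Type": "",
        "_ResourceId": "",
    }
```

The rename from `eventtime` to `EventTime` is the step that is easy to get wrong, because a lookup with the wrong case simply finds no such field.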

Now that I know I have a query that works, I will save it as a function that can be used automatically with data ingestion into the Syslog table.

    .create-or-alter function FluentBitSyslogExpand {
        FluentBitSyslogRaw
        | project
            TenantId = tostring(''),
            SourceSystem = tostring('Fluent-bit'),
            TimeGenerated = todatetime(now()),
            Computer = tostring(''),
            EventTime = todatetime(eventtime),
            Facility = tostring(Facility),
            HostName = tostring(HostName),
            SeverityLevel = tostring(SeverityLevel),
            SyslogMessage = tostring(SyslogMessage),
            ProcessID = toint(ProcessID),
            HostIP = tostring(''),
            ProcessName = tostring(ProcessName),
            MG = tostring(''),
            CollectorHostName = tostring(''),
            Type = tostring(''),
            _ResourceId = tostring('')
    }

There are a number of fields in the Syslog table that aren't going to be populated by my fluent-bit process. I account for these by passing blank values, which prevents ADX raising an error because my function returns a different number of columns from the table schema.
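A simple way to catch that kind of mismatch before creating the function is to compare the two column lists. The Python sketch below does this for column names only. The helper name `schema_mismatches` is hypothetical, and checking the order as well as the names is my assumption about what a safe comparison should include. The column list is copied from the Syslog table definition earlier in this article.

```python
SYSLOG_COLUMNS = [
    "TenantId", "SourceSystem", "TimeGenerated", "Computer", "EventTime",
    "Facility", "HostName", "SeverityLevel", "SyslogMessage", "ProcessID",
    "HostIP", "ProcessName", "MG", "CollectorHostName", "Type", "_ResourceId",
]


def schema_mismatches(table_columns, function_columns):
    """Describe how the function's output differs from the table schema."""
    problems = []
    # Comparison is case sensitive, so 'eventtime' != 'EventTime'.
    missing = [c for c in table_columns if c not in function_columns]
    extra = [c for c in function_columns if c not in table_columns]
    if missing:
        problems.append(f"missing from function output: {missing}")
    if extra:
        problems.append(f"not in table schema: {extra}")
    if not missing and not extra and list(table_columns) != list(function_columns):
        problems.append("same columns but in a different order")
    return problems


if __name__ == "__main__":
    # Example: the function forgets to rename eventtime to EventTime.
    output = [("eventtime" if c == "EventTime" else c) for c in SYSLOG_COLUMNS]
    for problem in schema_mismatches(SYSLOG_COLUMNS, output):
        print(problem)
```

An empty result means the function's output lines up with the table, column for column.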

I can now set the update policy on my Syslog table to trigger when data flows into the FluentBitSyslogRaw table, using my function to expand / project values into the Syslog table.

    .alter table Syslog policy update @'[{"Source": "FluentBitSyslogRaw", "Query": "FluentBitSyslogExpand()", "IsEnabled": true, "IsTransactional": true}]'

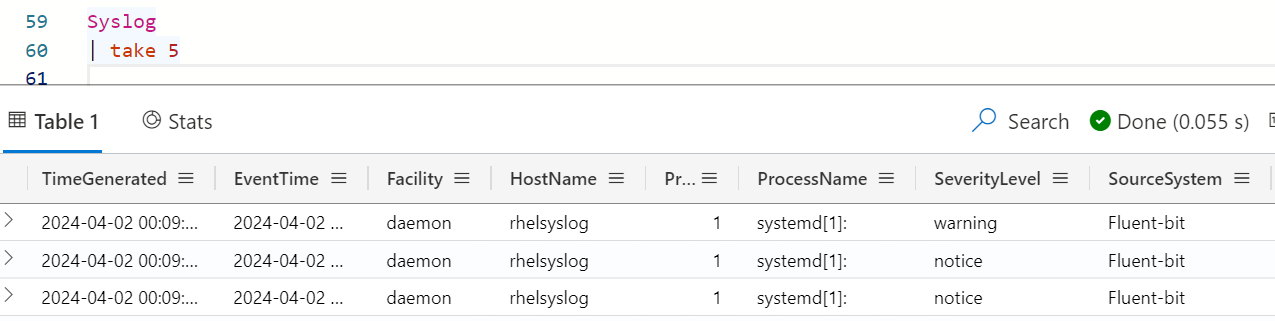

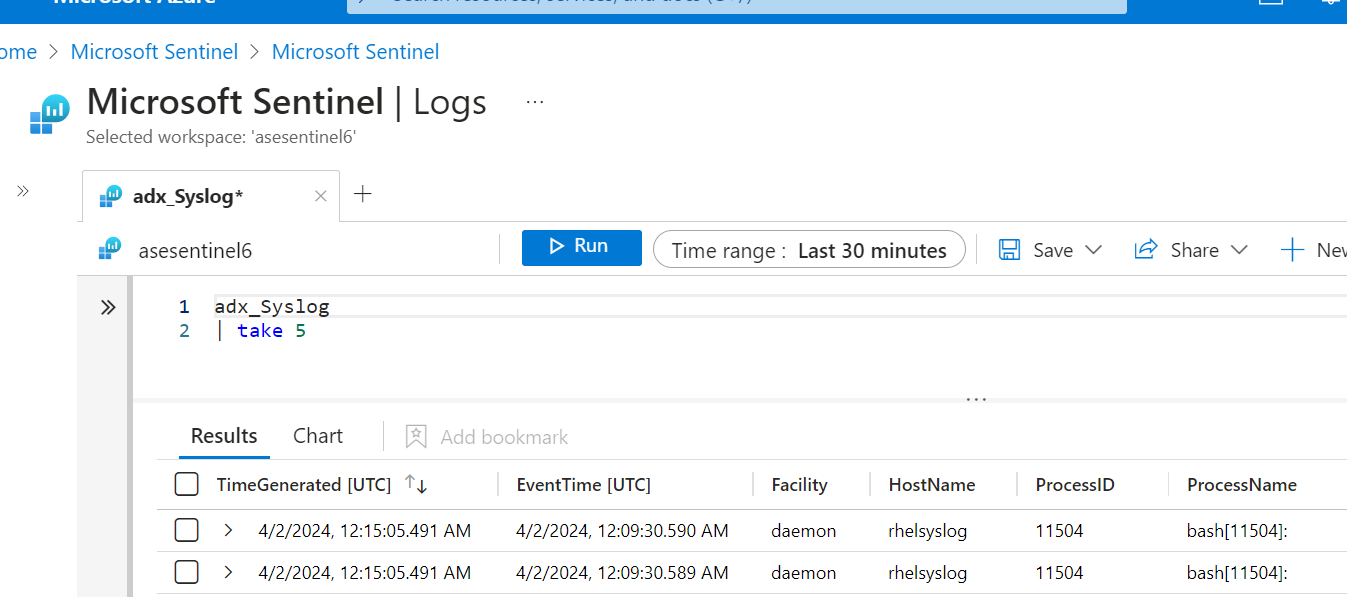

After a few minutes, querying the Syslog table in ADX will return data that appears the same as the Syslog table in Sentinel.

This will ultimately allow a vast amount of information to be queried through Microsoft Sentinel, using custom functions to include ADX data within Sentinel queries.
